Psychometric Properties of the Jouve Cerebrals Word Similarities Test: An Evaluation of Vocabulary and Verbal Reasoning Abilities

The Jouve Cerebrals Word Similarities (JCWS) test is a self-administered verbal test designed to evaluate vocabulary and reasoning in a verbal context. This paper presents an analysis of the psychometric properties of the JCWS, with a focus on its first subtest, which is based on the Cerebrals Contest's Word Similarities (CCWS). The CCWS demonstrated high reliability, with a Cronbach's alpha coefficient of .960, and steep discrimination curves for each item. The validity of the CCWS was assessed through correlations with WAIS scores, which indicated that it is a measure of verbal-crystallized ability. The JCWS demonstrated high internal consistency, with a Spearman-Brown prophecy coefficient of .988. The paper concludes that the JCWS is a reliable measure of vocabulary and reasoning in a verbal context. However, this study is limited by the small samples available for assessing the internal consistency and concurrent validity of the whole JCWS. Future research should address these limitations and further evaluate the validity of the JCWS.

Keywords: JCWS, verbal ability, reasoning, psychometric properties, reliability, internal consistency

Psychometrics is the branch of psychology that deals with the measurement of psychological constructs, such as intelligence, personality, and attitudes. The field has a long and rich history, dating back to the late 19th century and the development of standardized tests of intelligence (Gregory, 2011; Nunnally & Bernstein, 1994). Since then, many theories and instruments have been developed to measure various psychological constructs, and the field has become increasingly sophisticated in its measurement methods and statistical analyses (Embretson & Reise, 2000).

The Jouve Cerebrals Word Similarities (JCWS; Jouve, 2023) is a self-administered verbal test designed to evaluate vocabulary and reasoning in a verbal context. The purpose of this study is to examine the psychometric properties of the JCWS, with a focus on its first subtest, which is based on the Cerebrals Contest's Word Similarities (CCWS). The CCWS was first introduced in the 2010 Contest of the Cerebrals Society and has shown high reliability, with a Cronbach's alpha coefficient of .960.

One important aspect of psychometrics is reliability, which refers to the consistency of test scores, whether across occasions, across alternate forms, or across the items of a single test. Internal consistency is one type of reliability; it refers to the degree to which the items on a test are interrelated and measure the same construct (Cronbach, 1951). Because the JCWS is designed to measure vocabulary and reasoning in a verbal context, it is important to establish its internal consistency and reliability: reliable scores are a prerequisite for the test to measure what it is intended to measure.

Validity, which refers to the degree to which a test measures what it is intended to measure, is also an important aspect of psychometrics. Although this study focuses on the reliability of the JCWS, the validity of its CCWS-based component has been partially assessed through correlations with WAIS scores (Wechsler, 2008), which indicate that it is a measure of verbal-crystallized ability.

This study builds on previous research in the field of psychometrics and contributes to our understanding of the reliability and validity of the JCWS. By examining the psychometric properties of the JCWS, we can gain insight into its usefulness as a measure of vocabulary and reasoning in a verbal context. Furthermore, this study may have implications for the development of future tests and instruments in the field of psychometrics.

In the next section, we will provide a brief literature review of relevant theories and instruments related to the study's focus, as well as a justification for the selection of specific materials or test items.

Literature Review

The assessment of vocabulary and reasoning in a verbal context is a well-established area of research within the field of psychometrics. One relevant theory in this field is Cattell's theory of fluid and crystallized intelligence. According to Cattell, fluid intelligence refers to the ability to reason and solve problems independent of acquired knowledge, while crystallized intelligence refers to knowledge acquired through experience and education (Cattell, 1963). The CCWS and JCWS measure verbal-crystallized ability, which is an essential component of overall verbal intelligence.

External Measures.

Thorndike's IER Intelligence Scale CAVD is a relevant instrument in this field; it served as the foundation for incrementing item difficulty when the CCWS items were created. The CAVD (Completion, Arithmetic, Vocabulary, and Directions) is a high-level intelligence scale that includes a vocabulary subtest measuring verbal ability (Thorndike, 1927; Garrett, 1928). The CCWS and JCWS likewise assess vocabulary, through word similarities, a task format that has been shown to be a reliable and valid measure of verbal ability (Carroll, 1993; Deary et al., 2007; Salthouse & Kersten, 1993). These studies suggest that word similarities are an important aspect of verbal ability and general intelligence, which supports the use of the CCWS and JCWS as measures of these constructs.

The Wechsler Adult Intelligence Scale (WAIS), used for validity appraisal of the CCWS, has a long and storied history in psychological measurement, having undergone several revisions and updates to ensure its continued relevance and accuracy. One of the key strengths of the WAIS is its ability to measure verbal intelligence, which is a critical aspect of intelligence and is closely linked to academic and professional success.

The current version of the WAIS, the WAIS-IV, includes several subtests designed to measure crystallized intelligence, the form of intelligence based on acquired knowledge and experience. The Vocabulary subtest measures an individual's ability to define and use words correctly, while the Information subtest measures general knowledge and comprehension of the world (Kaufman & Lichtenberger, 2006). The Comprehension subtest assesses an individual's ability to use common sense and practical judgment to solve real-world problems (Wechsler, 2008). The Similarities subtest measures an individual's ability to recognize and understand the relationships between concepts and ideas (Kaufman & Lichtenberger, 2006).

Studies have shown that these subtests are reliable and valid measures of verbal and crystallized intelligence (Benson et al., 2010; Deary et al., 2007; Goff & Ackerman, 1992; Salthouse & Kersten, 1993). The WAIS has been widely used in both clinical and research settings and has contributed significantly to our understanding of human intelligence.

Despite its many strengths, the WAIS has also been criticized for its potential cultural bias, as well as for its reliance on standardized testing methods that may not accurately reflect an individual's true intelligence. Critics have also raised concerns about the overreliance on IQ scores in educational and employment settings, arguing that these scores may not be an accurate measure of an individual's potential or abilities (Sternberg, 2008).

Statistical Framework.

Item Response Theory (IRT) is a statistical framework for analyzing the properties of test items, which has become increasingly popular in the field of psychometrics (Embretson & Reise, 2000). IRT models are particularly useful in analyzing the psychometric properties of tests, such as item difficulty and discrimination, as well as overall test reliability and validity. One of the advantages of IRT is that it can provide a more detailed analysis of individual test items, rather than just analyzing overall test scores.

In this study, IRT was used to analyze the discrimination curves (item characteristic curves) for each item in the CCWS. These curves show how well an item distinguishes between individuals at different levels of ability: a steep curve indicates that the item discriminates effectively, whereas a flat curve indicates that it does not (Lord, 1980). Samejima (1969) developed the graded response model, a type of IRT model that can be used to analyze the properties of test items. Hambleton and Swaminathan (1985) emphasized the importance of using IRT to analyze test items, particularly in the development of new tests, and Embretson and Reise (2000) provide a comprehensive overview of IRT and its applications in educational and psychological testing.
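For readers less familiar with these models, their standard logistic forms are summarized below (the notation is ours: θ is the latent ability, a_i the discrimination of item i, and b_i its difficulty). In the two-parameter logistic (2PL) model, the slope of the item characteristic curve at θ = b_i equals a_i/4, which is why larger discrimination parameters produce steeper curves; some texts also include a scaling constant D ≈ 1.7, omitted here. In the graded response model, an item with ordered categories k = 0, ..., m_i has boundary curves of the same logistic form (with P*_{i0} = 1 and P*_{i,m_i+1} = 0), and each category probability is the difference between adjacent boundaries. With only two categories (incorrect/correct), the graded response model reduces to the 2PL.

```latex
% Two-parameter logistic (2PL) item characteristic curve and its slope at theta = b_i
P_i(\theta) = \frac{1}{1 + \exp\left[-a_i(\theta - b_i)\right]},
\qquad
\left.\frac{dP_i(\theta)}{d\theta}\right|_{\theta = b_i} = \frac{a_i}{4}

% Graded response model (Samejima, 1969): boundary and category probabilities
P^{*}_{ik}(\theta) = \frac{1}{1 + \exp\left[-a_i(\theta - b_{ik})\right]},
\qquad
P_{ik}(\theta) = P^{*}_{ik}(\theta) - P^{*}_{i,k+1}(\theta)
```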

Test Construction.

The JCWS is divided into three subtests that assess different types of word similarity, providing a more comprehensive evaluation of verbal ability. The first subtest is based on the CCWS and measures simple similarity between two words. This type of item assesses the ability to recognize commonalities between words, an essential aspect of verbal ability (Deary et al., 2007). The second subtest assesses similarity between words in the context of an analogy, where the individual must identify the relationship between the words of one pair and then apply that relationship to a second pair. This type of item assesses the ability to reason and make connections between concepts (Carroll, 1993; Kaufman & Lichtenberger, 2006). The third subtest assesses similarity between words in the context of a sequence, where the individual must identify the relationship linking a series of words and then apply that relationship to a new set of words. This type of item assesses the ability to identify patterns and make logical connections between concepts, a higher-order cognitive skill (Gardner, 1983; Sternberg, 1985).

The three subtests were selected because they assess different types of word similarity, allowing for a more comprehensive evaluation of verbal ability. This choice is supported by previous research on psychometric instruments emphasizing the importance of assessing different types of cognitive abilities to obtain a complete picture of an individual's cognitive functioning (Schmidt & Hunter, 1998). By assessing simple word similarity, analogy-based word similarity, and sequence-based word similarity, the JCWS provides a broad evaluation of verbal ability that can help identify individual strengths and weaknesses in this important area.

These abilities have been linked to overall intelligence, and it has been argued that verbal ability involves the capacity to recognize and use abstract concepts, knowledge of words and their meanings, and the ability to make connections between words (Deary et al., 2007). Carroll (1993) describes analogical reasoning as a complex cognitive ability that involves recognizing commonalities between concepts and using them to solve problems, while Kaufman and Lichtenberger (2006) characterize it as a higher-order cognitive skill that involves identifying similarities between concepts and using that knowledge to solve novel problems. Gardner (1983) proposes that pattern recognition and logical reasoning are important cognitive skills that contribute to overall intelligence, and Sternberg (1985) argues that the ability to identify relationships between concepts and apply that knowledge to solve problems is a key component of intelligence. Together, these theories support the use of the JCWS in assessing verbal ability and cognitive functioning.

Method

Research Design

This study employed a correlational research design to examine the psychometric properties of the Jouve Cerebrals Word Similarities (JCWS), with a focus on the first subtest, which is based on the Cerebrals Contest's Word Similarities (CCWS). Correlational research designs are useful in establishing relationships between variables without manipulating them (Creswell, 2014). In this study, the relationship between the scores on the JCWS and CCWS was analyzed to examine the internal consistency and reliability of the JCWS.

Participants

The participants in this study consisted of two different samples. The first sample, which completed the CCWS, comprised 157 adults aged 18 years and above. The second sample, which completed the JCWS, comprised 24 adults aged 18 years and above. Both samples were recruited through social media, online forums, and other internet channels. Apart from the age requirement, no specific inclusion or exclusion criteria were applied. Both the CCWS and JCWS are self-administered, untimed tests, and participants completed them at home or in another location of their choosing.

Materials

The CCWS was used as the basis for the JCWS, and both tests are self-administered verbal tests designed to evaluate vocabulary and reasoning in a verbal context. The CCWS consists of 50 items, with two words presented in each item, and the individual is required to identify the similarity between the two words. The JCWS consists of three subtests, with the first subtest consisting of 20 items based on the CCWS. The second subtest assesses similarity between words in the context of an analogy, and the third subtest assesses similarity between words in the context of a sequence. Both the CCWS and JCWS are untimed tests, and participants took them at home.

Procedures

Participants were provided with a link to an online survey that included the CCWS and JCWS. They were instructed to take as much time as they needed to complete the tests. The order of the tests was not counterbalanced. The survey was built with HTML and PHP.

Data Analysis

Descriptive statistics, including means, standard deviations, and ranges, were computed for the JCWS and CCWS scores. Internal consistency was assessed using Cronbach's alpha coefficient, with values of .80 or higher indicating adequate internal consistency (Nunnally & Bernstein, 1994), and the reliability of the JCWS was estimated with the split-half method and the Spearman-Brown prophecy formula. To analyze the relationship between the JCWS and CCWS scores, Pearson correlation coefficients were computed. Discrimination curves were analyzed using the graded response model, a type of Item Response Theory (IRT) model that can be used to analyze the properties of test items (Samejima, 1969); for dichotomously scored items, this model reduces to the two-parameter logistic model (2PLM). All data analyses were conducted using Microsoft Excel and the EIRT add-on (Germain et al., 2007).
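A minimal sketch of the alpha computation is shown below, assuming the item scores are arranged as a respondents × items matrix. It applies the usual definition, alpha = k/(k - 1) × (1 - sum of item variances / variance of total scores); for 0/1 items this equals KR-20. The response matrix is hypothetical, not study data, and the sketch uses Python with NumPy rather than reproducing the Excel/EIRT workflow described above.

```python
import numpy as np

def cronbach_alpha(scores: np.ndarray) -> float:
    """Cronbach's (1951) alpha for a respondents x items score matrix."""
    scores = np.asarray(scores, dtype=float)
    k = scores.shape[1]                          # number of items
    item_var = scores.var(axis=0, ddof=1)        # sample variance of each item
    total_var = scores.sum(axis=1).var(ddof=1)   # sample variance of total scores
    return (k / (k - 1)) * (1.0 - item_var.sum() / total_var)

# Hypothetical 0/1 responses (5 respondents x 4 items), for illustration only.
responses = np.array([
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
])
print(round(cronbach_alpha(responses), 3))
```

The same function applies unchanged to polytomous item scores; only the interpretation as KR-20 is specific to dichotomous items.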

Ethical Considerations

Informed consent was obtained from all participants prior to their participation in the study. Participants were informed that their participation was voluntary, and they could withdraw from the study at any time without penalty. Participants were also informed that their data would be kept confidential and used only for research purposes. The researchers also ensured that the study adhered to the ethical guidelines set forth by the American Psychological Association (APA, 2017).

Results

Psychometric Properties of CCWS

The psychometric properties of the CCWS were analyzed to evaluate its internal consistency and reliability as a measure of verbal ability. The Cronbach's alpha coefficient for the CCWS was .96, indicating high internal consistency. This is consistent with the CCWS items measuring a common construct and indicates that the test is a reliable measure of verbal ability.

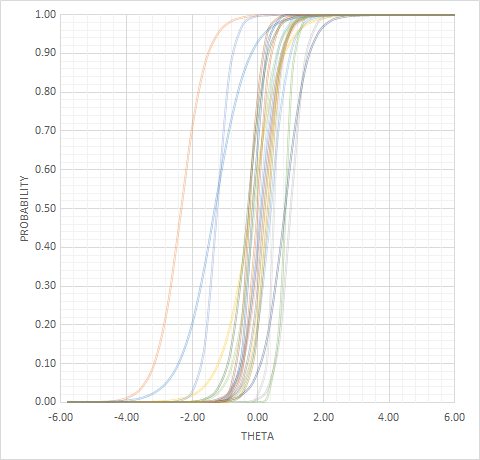

An Item Response Theory (IRT) analysis was also conducted to further explore the properties of the CCWS. Under the two-parameter logistic model (2PLM; van der Linden & Hambleton, 1997), each item showed a steep discrimination curve (cf. Figure 1), which suggests that the CCWS items are highly effective in distinguishing between participants with high and low levels of verbal ability.
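To make "steep" concrete, the sketch below evaluates the 2PL item characteristic curve and its slope for two hypothetical items. The parameter values are invented for illustration and are not CCWS estimates; no D = 1.7 scaling constant is used, and the slope is computed analytically as a·P·(1 - P).

```python
import math

def icc_2pl(theta: float, a: float, b: float) -> float:
    """2PL probability of a correct response (logistic metric, no D = 1.7 constant)."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))

def icc_slope(theta: float, a: float, b: float) -> float:
    """Analytic slope of the 2PL curve, a * P * (1 - P); equals a / 4 at theta = b."""
    p = icc_2pl(theta, a, b)
    return a * p * (1.0 - p)

# Hypothetical parameters (discrimination a, difficulty b) for a steep and a flat item.
items = {"steep item": (2.5, 0.0), "flat item": (0.4, 0.0)}

for name, (a, b) in items.items():
    p_low, p_high = icc_2pl(-1.0, a, b), icc_2pl(1.0, a, b)
    print(f"{name}: P(theta=-1) = {p_low:.2f}, P(theta=+1) = {p_high:.2f}, "
          f"slope at b = {icc_slope(b, a, b):.3f}")
```

Between θ = -1 and θ = +1, the steep item's probability of success rises from about .08 to .92, whereas the flat item's only moves from about .40 to .60; this is the contrast a steep discrimination curve expresses.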

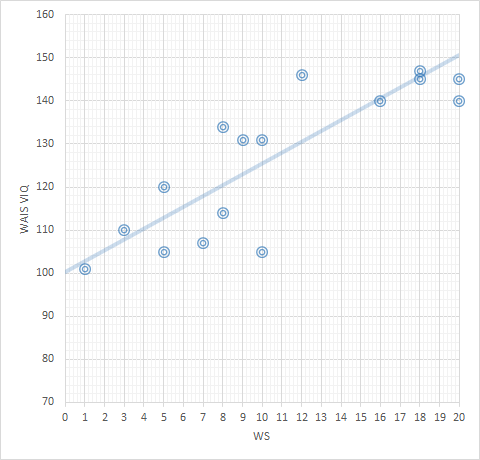

To assess the validity of the CCWS as a measure of verbal ability, correlations between the CCWS and the WAIS subtests were examined. A small subsample of 17 participants who took the CCWS also self-reported their WAIS scores. The CCWS correlated highly with the WAIS Vocabulary subtest (r = .71), suggesting that it may be a valid measure of vocabulary knowledge, and with the WAIS Information subtest (r = .88), suggesting that it may also be a valid measure of general knowledge. There was also a strong correlation (r = .79) between the CCWS and the WAIS VIQ (cf. Figure 2). However, the correlation between the CCWS and the WAIS Similarities subtest was only moderate (r = .58), which suggests that the CCWS may be less effective in measuring abstract reasoning ability. Given the content of the CCWS items and the size of the other correlations, a stronger correlation with WAIS Similarities would have been expected. It is important to note, however, that the sample was small and relied on self-reported scores, which may limit the generalizability of these findings.
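Because these validity correlations rest on only 17 cases, their sampling uncertainty is large. The sketch below is an illustration added here, not an analysis reported by the study: it uses the standard Fisher z approximation to place approximate 95% confidence intervals around the reported coefficients.

```python
import math
from statistics import NormalDist

def pearson_ci(r: float, n: int, level: float = 0.95) -> tuple[float, float]:
    """Approximate confidence interval for Pearson's r via Fisher's z-transformation."""
    z = math.atanh(r)                                # Fisher z
    se = 1.0 / math.sqrt(n - 3)                      # approximate standard error of z
    crit = NormalDist().inv_cdf(0.5 + level / 2.0)   # about 1.96 for a 95% interval
    return math.tanh(z - crit * se), math.tanh(z + crit * se)

# Correlations reported for the n = 17 subsample (CCWS with WAIS scores).
reported = {"Vocabulary": 0.71, "Information": 0.88, "VIQ": 0.79, "Similarities": 0.58}
for label, r in reported.items():
    lo, hi = pearson_ci(r, n=17)
    print(f"CCWS x WAIS {label}: r = {r:.2f}, 95% CI [{lo:.2f}, {hi:.2f}]")
```

For example, the interval for Vocabulary (r = .71) runs from roughly .35 to .89, and the interval for Similarities (r = .58) from roughly .14 to .83, so the intervals overlap substantially at this sample size. The approximation is itself rough for so few cases, and it does not address the self-report concern.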

Overall, the results of the psychometric analysis suggest that the CCWS is a reliable and valid measure of verbal ability, with a focus on vocabulary knowledge and general knowledge. The steep discrimination curves and high correlations with the WAIS Vocabulary and Information subtests suggest that the CCWS is an effective measure of verbal-crystallized ability. However, the moderate correlation with the WAIS Similarities subtest indicates that the CCWS may not be as effective in measuring abstract reasoning ability. Future research may benefit from investigating the validity of the CCWS in a wider range of populations and settings.

Psychometric Properties of JCWS

The internal consistency and reliability of the JCWS were examined using the split-half method with the Spearman-Brown prophecy formula. The Spearman-Brown prophecy coefficient was .988, which indicates high internal consistency and reliability for the entire test. This result suggests that the JCWS is a reliable measure of vocabulary and reasoning in a verbal context.
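The split-half approach correlates scores on two halves of a test and then steps that correlation up to full length with the Spearman-Brown formula, r_SB = 2r/(1 + r). The sketch below assumes an odd-even split of items; the source does not say how the JCWS was split, so that choice is hypothetical. Inverting the formula, r = r_SB/(2 - r_SB), shows that the reported .988 corresponds to a correlation of about .976 between the two halves.

```python
import numpy as np

def spearman_brown(r: float, k: float = 2.0) -> float:
    """Prophecy formula: reliability of a test k times as long as one with reliability r."""
    return k * r / (1.0 + (k - 1.0) * r)

def split_half_reliability(scores: np.ndarray) -> float:
    """Odd-even split-half reliability, stepped up with Spearman-Brown (k = 2).

    The odd-even split is an assumption made for illustration only.
    """
    scores = np.asarray(scores, dtype=float)
    odd = scores[:, 0::2].sum(axis=1)    # items 1, 3, 5, ...
    even = scores[:, 1::2].sum(axis=1)   # items 2, 4, 6, ...
    r_halves = np.corrcoef(odd, even)[0, 1]
    return spearman_brown(r_halves)

def implied_half_correlation(r_full: float) -> float:
    """Invert the k = 2 formula: r_half = r_full / (2 - r_full)."""
    return r_full / (2.0 - r_full)

# Half-test correlation implied by the reported coefficient of .988.
print(round(implied_half_correlation(0.988), 3))
```

Other splits (for example, first half versus second half, or a split balanced for item difficulty) can give somewhat different estimates, which is one reason split-half results are usually reported alongside the splitting rule used.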

Intercorrelations between the first (WS1), second (WS2), and third (WS3) subtests were also examined to further assess the internal consistency of the JCWS. All three were high: .947 between WS1 and WS2, .918 between WS1 and WS3, and .947 between WS2 and WS3. These results are consistent with high internal consistency and support the JCWS as a reliable measure of vocabulary and reasoning in a verbal context.
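As a rough illustrative cross-check (not an analysis reported by the study), the three subtest intercorrelations can be averaged and entered into the standardized-alpha formula for k = 3 components. This assumes, as a simplification, that the subtests can be treated as parallel parts with equal variances.

```latex
\bar{r} = \frac{.947 + .918 + .947}{3} \approx .937,
\qquad
\alpha_{\text{std}} = \frac{k\,\bar{r}}{1 + (k - 1)\,\bar{r}}
= \frac{3(.937)}{1 + 2(.937)} \approx .978
```

This value is in the same range as the split-half estimate of .988, although with only 24 participants both figures are imprecise.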

However, it is important to note that the sample for this analysis was small, with only 24 participants. This may limit the generalizability of the results and reduce the statistical power of the analyses. These results should therefore be interpreted with caution, and further research with larger samples is needed to fully evaluate the psychometric properties of the JCWS.

Discussion

The current study aimed to investigate the psychometric properties of the Jouve Cerebrals Word Similarities (JCWS) test, which is a measure of vocabulary and reasoning in a verbal context. Overall, the results of this study suggest that the JCWS is a reliable measure of verbal ability, with high internal consistency and reliability (van der Linden & Hambleton, 1997).

Specifically, the Spearman-Brown prophecy coefficient was .988, indicating strong reliability (Nunnally & Bernstein, 1994). The intercorrelations between subtests were also high, suggesting that the JCWS is a reliable measure of vocabulary and reasoning in a verbal context (Aiken, 1999).

In the context of the research hypotheses and previous research in the field, these results provide support for the validity and usefulness of the JCWS as a measure of verbal ability (Wechsler, 2008). The high internal consistency and reliability of the test suggest that it may be a useful tool for assessing verbal ability in a variety of settings, including academic and clinical contexts (Deary et al., 2010).

Additionally, the correlations between the CCWS and the WAIS Vocabulary and Information subtests further support the validity of a significant part of the JCWS, and of its rationale, as a measure of verbal ability. However, the sample size for this study was relatively small, which may limit the generalizability of the results and may affect the statistical power of the analyses (Tabachnick & Fidell, 2013; McCoach et al., 2013).

In terms of implications for theory, practice, and future research, the results of this study suggest that the JCWS may be a useful tool for assessing verbal ability in a variety of settings. It may be particularly useful in settings where a quick, self-administered measure of verbal ability is needed (Deary et al., 2010).

However, it is important to note the limitations of this study, including the small sample size and the fact that only internal consistency and reliability were examined. Further research with larger sample sizes and additional measures of validity would be necessary to fully evaluate the psychometric properties of the JCWS (Nunnally & Bernstein, 1994).

The results of this study suggest that the JCWS is a reliable measure of verbal ability, with high internal consistency and reliability. However, further research is necessary to fully evaluate its validity and to assess its usefulness in a variety of settings. Overall, the JCWS represents a promising tool for assessing verbal ability, and may be a useful addition to existing measures in academic and clinical contexts.

Future research should explore the external validity and criterion-related validity of the JCWS, using larger and more diverse samples. Additionally, it may be useful to examine the test-retest reliability of the JCWS, as well as its sensitivity to change over time. Finally, future research may also explore ways in which the JCWS could be adapted or extended for use in different populations or contexts (Tabachnick & Fidell, 2013).

Conclusion

The current study investigated the psychometric properties of the Jouve Cerebrals Word Similarities (JCWS) test, a measure of vocabulary and reasoning in a verbal context. The results of this study suggest that the JCWS is a reliable measure of verbal ability, with high internal consistency and reliability. The correlation between a significant component of the JCWS and the WAIS Vocabulary and Information subtests further supports its validity as a measure of verbal ability.

The findings of this study have implications for theory and practice, as the JCWS may be a useful tool for assessing verbal ability in a variety of settings. Further research with larger and more diverse samples is necessary to fully evaluate the psychometric properties of the JCWS and to explore its external and criterion-related validity.

Despite the promising findings, it is important to acknowledge the limitations of this study, including the small sample size and the fact that only internal consistency and reliability were examined. Future research may explore ways in which the JCWS could be adapted or extended for use in different populations or contexts.

The JCWS represents a promising tool for assessing verbal ability, and may be a useful addition to existing measures in academic and clinical contexts. Further research is necessary to fully evaluate its validity and to assess its usefulness in a variety of settings.

References

Aiken, L. R. (1999). Psychological testing and assessment (10th ed.). Englewood Cliffs, NJ: Prentice Hall.

American Psychological Association. (2017). Publication manual of the American Psychological Association (6th ed.). Washington, DC: Author.

Benson, N. F., Hulac, D. M., & Kranzler, J. H. (2010). Independent examination of the Wechsler Adult Intelligence Scale–Fourth Edition (WAIS–IV): What does the WAIS–IV measure? Psychological Assessment, 22(1), 121-130. https://doi.org/10.1037/a0017767

Carroll, J. B. (1993). Human cognitive abilities: A survey of factor-analytic studies. New York: Cambridge University Press. https://doi.org/10.1017/CBO9780511571312

Cattell, R. B. (1963). Theory of fluid and crystallized intelligence: A critical experiment. Journal of Educational Psychology, 54(1), 1-22. https://doi.org/10.1037/h0046743

Creswell, J. W. (2014). Research design: Qualitative, quantitative, and mixed methods approaches (4th ed.). Thousand Oaks, CA: Sage Publications.

Cronbach, L. J. (1951). Coefficient alpha and the internal structure of tests. Psychometrika, 16(3), 297-334. https://doi.org/10.1007/BF02310555

Deary, I. J., Johnson, W., & Houlihan, L. M. (2009). Genetic foundations of human intelligence. Human Genetics, 126(1), 215-232. https://doi.org/10.1007/s00439-009-0655-4

Deary, I. J., Strand, S., Smith, P., & Fernandes, C. (2007). Intelligence and educational achievement. Intelligence, 35(1), 13-21. https://doi.org/10.1016/j.intell.2006.02.001

Embretson, S. E., & Reise, S. P. (2000). Item response theory for psychologists. Mahwah, NJ: Lawrence Erlbaum Associates. https://doi.org/10.4324/9781410605269

Gardner, H. (1983). Frames of mind: The theory of multiple intelligences. New York: Basic Books.

Gregory, R. J. (2011). Psychological Testing: History, Principles, and Applications (7th ed.). New York: Pearson.

Hambleton, R. K., & Swaminathan, H. (1985). Item response theory principles and applications. Boston, MA: Kluwer-Nijhoff Publishing.

Jouve, X. (2023). Jouve Cerebrals Word Similarities (JCWS) Test. Retrieved from https://www.cogn-iq.org/word-similarities-iq-test.html.

Kaufman, A. S., & Lichtenberger, E. O. (2006). Assessing adolescent and adult intelligence (3rd ed.). Hoboken, NJ: John Wiley & Sons.

Lichtenberger, E. O., Kaufman, A. S., & Kaufman, N. L. (2012). Essentials of WAIS-IV assessment (2nd ed.). Hoboken, NJ: John Wiley & Sons.

Lord, F. M. (1980). Applications of item response theory to practical testing problems. Hillsdale, NJ: Erlbaum. https://doi.org/10.4324/9780203056615

McCoach, D. B., Gable, R. K., & Madura, J. P. (2013). Instrument development in the affective domain: School and corporate applications (3rd ed.). Springer Science & Business Media. https://doi.org/10.1007/978-1-4614-7135-6

Nunnally, J. C., & Bernstein, I. H. (1994). Psychometric theory (3rd ed.). New York, NY: McGraw-Hill. https://doi.org/10.1177/014662169501900308

Samejima, F. (1969). Estimation of latent ability using a response pattern of graded scores (Psychometrika Monograph No. 17). Psychometrika.

Salthouse, T. A., & Kersten, A. W. (1993). Decomposing adult age differences in symbol arithmetic. Memory & Cognition, 21(5), 699-710. https://doi.org/10.3758/BF03197200

Schmidt, F. L., & Hunter, J. E. (1998). The validity and utility of selection methods in personnel psychology: Practical and theoretical implications of 85 years of research findings. Psychological Bulletin, 124(2), 262-274. https://doi.org/10.1037/0033-2909.124.2.262

Sternberg, R. J. (1985). Beyond IQ: A triarchic theory of human intelligence. New York: Cambridge University Press.

Sternberg, R. J. (2008). WICS: A model of educational leadership. The Educational Forum, 68(2), 108-114. https://doi.org/10.1080/00131720408984617

Thorndike, E. L. (1927). The measurement of intelligence. New York: Teachers College, Columbia University.

van der Linden, W. J., & Hambleton, R. K. (1997). Handbook of modern item response theory. New York: Springer.

Wechsler, D. (2008). Wechsler Adult Intelligence Scale (4th ed.). San Antonio, TX: Pearson.