Multidimensional Structure of Cognitive Abilities: Differentiating Literary and Scientific Tasks in JCCES and ACT Assessments

This study examines the multidimensional structure of cognitive abilities through the differentiation of literary and scientific tasks in the JCCES and ACT assessments. Employing multidimensional scaling (MDS) analysis with a sample of 60 participants, the study identifies two distinct dimensions: literary versus scientific tasks (Dimension 1) and JCCES versus ACT tasks (Dimension 2). These findings contribute to the understanding of the structure of cognitive abilities, highlighting the importance of considering domain-specific skills and contextual factors when interpreting test scores and designing educational interventions. Future research should further explore the multidimensional nature of cognitive abilities, investigate the differences between assessment tools, and examine underlying cognitive processes and neural mechanisms contributing to the observed distinctions.

cognitive abilities, multidimensional structure, literary tasks, scientific tasks, JCCES, ACT, assessment

The study of cognitive abilities has been a central focus of psychological research for more than a century, with numerous theories and models proposed to explain the underlying structure of intellectual functioning (Carroll, 1993; Cattell, 1971; Sternberg, 2003). One of the most enduring debates in psychometrics concerns whether cognitive abilities can be meaningfully differentiated into distinct components or are better understood as a single, unified construct (Deary, 2001; McGrew, 2009). To address this question, the present study employs multidimensional scaling (MDS) analysis to examine the structure of cognitive abilities, focusing on the differentiation between literary and scientific tasks, as well as the distinction between tasks from the Jouve Cerebrals Crystallized Educational Scale (JCCES; Jouve, 2010a) and the American College Test (ACT; ACT, 2016). The study builds on previous research that identified a single underlying factor accounting for the majority of the variance in the JCCES and the ACT (Jouve, 2014a).

A key issue in the investigation of cognitive abilities is the identification of relevant theories and instruments for the study's focus. Among the most influential theories in the field of psychometrics are the Cattell-Horn-Carroll (CHC) theory of cognitive abilities (Cattell, 1987; Horn & Cattell, 1966) and Sternberg's triarchic theory of intelligence (Sternberg, 1985). The CHC theory proposes a hierarchical structure of cognitive abilities, with general intelligence (g) at the apex and more specific abilities, such as fluid and crystallized intelligence, forming the lower levels (McGrew, 2009). The triarchic theory, on the other hand, emphasizes the role of contextual factors in shaping intellectual functioning and posits that intelligence is composed of three interrelated aspects: analytical, creative, and practical (Sternberg, 2003).

Both the JCCES and the ACT have been widely used as measures of cognitive abilities in various populations, with the JCCES assessing a range of cognitive competencies (Jouve, 2010a) and the ACT serving as a standardized test for college admissions in the United States (ACT, 2016). The JCCES has been validated as a measure of cognitive abilities, while the ACT has demonstrated strong correlations with intelligence measures, suggesting that it also assesses cognitive functioning (Coyle & Pillow, 2008). However, differences between the JCCES and ACT in terms of their specific tasks and domains assessed raise questions about the extent to which these instruments capture distinct aspects of cognitive abilities.

The present study aims to provide a clearer understanding of the structure of cognitive abilities by examining the differentiation between literary and scientific tasks, as well as the distinction between JCCES and ACT tasks, using MDS analysis. Building on previous research on the multidimensionality of cognitive abilities (e.g., Carroll, 1993; Embretson, 1998), the study seeks to offer a more nuanced understanding of the relationships among various aspects of intellectual functioning and contribute to the ongoing debate regarding the nature of cognitive abilities.

Method

Research Design

The present study employed a correlational research design to explore the multidimensional structure of cognitive abilities. This design was selected because it allows for the examination of relationships among multiple variables without manipulating or controlling any variable (Creswell, 2014). The correlational design was deemed appropriate for investigating the structure of cognitive abilities, as it enabled the examination of relationships between performance on various tasks and the underlying dimensions that might differentiate them.

Participants

A total of 60 participants were recruited for this study. Demographic characteristics were collected but are not reported here. Participants were high school seniors or college students who had completed both the Jouve Cerebrals Crystallized Educational Scale (JCCES) and the American College Test (ACT). No exclusion criteria were applied.

Materials

Two assessments were utilized to measure abilities in this study: the Jouve Cerebrals Crystallized Educational Scale (JCCES) and the American College Test (ACT). The JCCES is a measure of crystallized intelligence, comprising tasks that assess verbal and literary abilities (Jouve, 2010a). The ACT is a standardized test designed to measure academic achievement in English, mathematics, reading, and science (ACT, 2016). Both assessments have demonstrated strong reliability and validity in previous research (Coyle & Pillow, 2008; Jouve, 2010b; Jouve, 2010c; Jouve, 2014a; Jouve, 2014b; Jouve, 2015).

Procedures

Data collection involved obtaining participants' scores on both the JCCES and ACT assessments. Participants were instructed to provide their most recent ACT scores upon completing the JCCES online. The scores were then entered into a secure database for analysis. Prior to data collection, informed consent was obtained from all participants, and they were assured of the confidentiality and anonymity of their responses.

Data Analysis

The data were analyzed using multidimensional scaling (MDS), a statistical technique that enables the visualization of relationships among variables in a reduced-dimensional space (Kruskal & Wish, 1978). MDS was chosen for its ability to reveal the underlying dimensions that differentiate cognitive tasks based on participants' performance patterns. Kruskal's stress (1) was used as the stress criterion, and an initial configuration was generated using the Torgerson method (Torgerson, 1958).
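For reference, Kruskal's stress (1) is commonly written as shown below, where $d_{ij}$ is the distance between tasks $i$ and $j$ in the fitted configuration and $\hat{d}_{ij}$ is the corresponding disparity. The symbols are notation introduced here for illustration and are not taken from the original analysis output.

```latex
\text{Stress-1} = \sqrt{\frac{\sum_{i<j}\left(d_{ij} - \hat{d}_{ij}\right)^{2}}{\sum_{i<j} d_{ij}^{2}}}
```

Lower values indicate that the configuration reproduces the disparities more closely, with 0 corresponding to a perfect fit.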

Results

Statistical Analyses

A multidimensional scaling (MDS) analysis was conducted on the dataset to test the research hypotheses. The MDS analysis was performed using XLSTAT, with a sample size of N = 60. The analysis employed the absolute model, Kruskal's stress (1) as the stress criterion, and included dimensions ranging from 1 to 7. The initial configuration was set to random, with 10 repetitions, convergence set to 0.00001, and a maximum of 500 iterations.
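The settings reported above (absolute model, random starts with 10 repetitions, a convergence threshold of 0.00001, and at most 500 iterations) can be illustrated with a small pure-Python sketch. It is not XLSTAT's implementation. It assumes that the absolute model takes the target distances to be equal to the input dissimilarities, uses the SMACOF (Guttman transform) update as a stand-in optimizer, and applies the threshold to the decrease in raw stress. The function names and the four-point toy input are hypothetical.

```python
import math
import random


def pairwise(points):
    """Euclidean distance matrix of a list of coordinate lists."""
    return [[math.dist(p, q) for q in points] for p in points]


def raw_stress(dist, delta):
    """Sum of squared differences between distances and dissimilarities."""
    n = len(delta)
    return sum((dist[i][j] - delta[i][j]) ** 2
               for i in range(n) for j in range(i + 1, n))


def stress1(dist, delta):
    """Kruskal's stress (1), with disparities equal to the dissimilarities."""
    n = len(delta)
    scale = sum(dist[i][j] ** 2 for i in range(n) for j in range(i + 1, n))
    return math.sqrt(raw_stress(dist, delta) / scale)


def guttman_step(points, delta):
    """One SMACOF update with unit weights: X <- B(X) X / n."""
    n, dims = len(points), len(points[0])
    dist = pairwise(points)
    b = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j and dist[i][j] > 0:
                b[i][j] = -delta[i][j] / dist[i][j]
        b[i][i] = -sum(b[i])  # diagonal makes each row sum to zero
    return [[sum(b[i][j] * points[j][c] for j in range(n)) / n
             for c in range(dims)] for i in range(n)]


def fit_absolute_mds(delta, dims=2, repetitions=10, max_iter=500,
                     tol=1e-5, seed=0):
    """Return (stress1, configuration) for the best of several random starts."""
    rng = random.Random(seed)
    n = len(delta)
    best = None
    for _ in range(repetitions):
        points = [[rng.uniform(-1, 1) for _ in range(dims)] for _ in range(n)]
        previous = raw_stress(pairwise(points), delta)
        for _ in range(max_iter):
            points = guttman_step(points, delta)
            current = raw_stress(pairwise(points), delta)
            if previous - current < tol:  # assumed stopping rule
                break
            previous = current
        result = (stress1(pairwise(points), delta), points)
        if best is None or result[0] < best[0]:
            best = result
    return best


# Hypothetical toy input: distances among the corners of a unit square.
square = [[0.0, 0.0], [1.0, 0.0], [1.0, 1.0], [0.0, 1.0]]
toy_delta = pairwise(square)
best_stress, best_points = fit_absolute_mds(toy_delta)
```

Repeating the fit from several random starts and keeping the lowest-stress solution is a common guard against local minima, which is presumably the purpose of the 10 repetitions.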

The dataset included scores on the Jouve Cerebrals Crystallized Educational Scale (JCCES) – Verbal Analogies (VA), Mathematical Problems (MP), and General Knowledge (GK) – and the American College Test (ACT) – English (ENG), Mathematics (MATH), Reading (READ), and Science (SCIE).

Results of the MDS Analysis

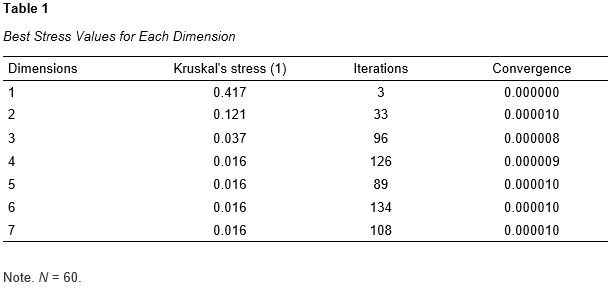

Table 1 reports the best stress value obtained for each number of dimensions. The optimal solution was found in the two-dimensional representation space, with Kruskal's stress (1) at 0.121.

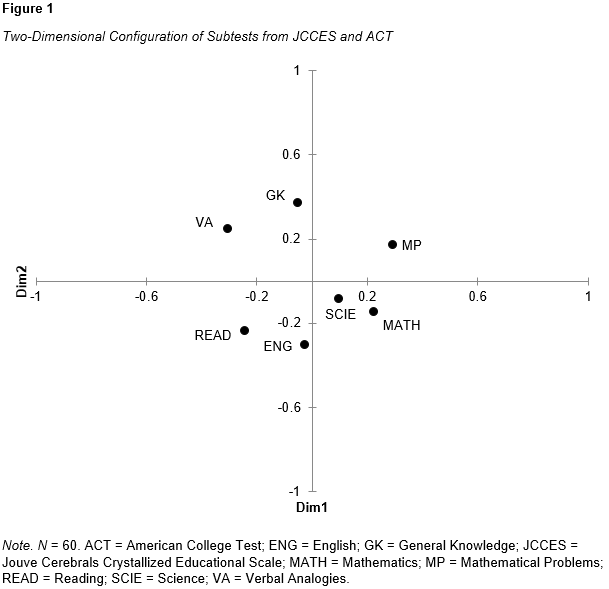

In the two-dimensional representation space, literary tasks took negative values and scientific tasks took positive values along Dimension 1. Along Dimension 2, negative values represented ACT tasks, while positive values represented JCCES tasks (Figure 1).

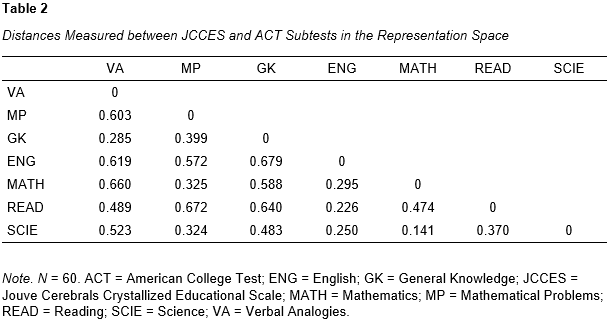

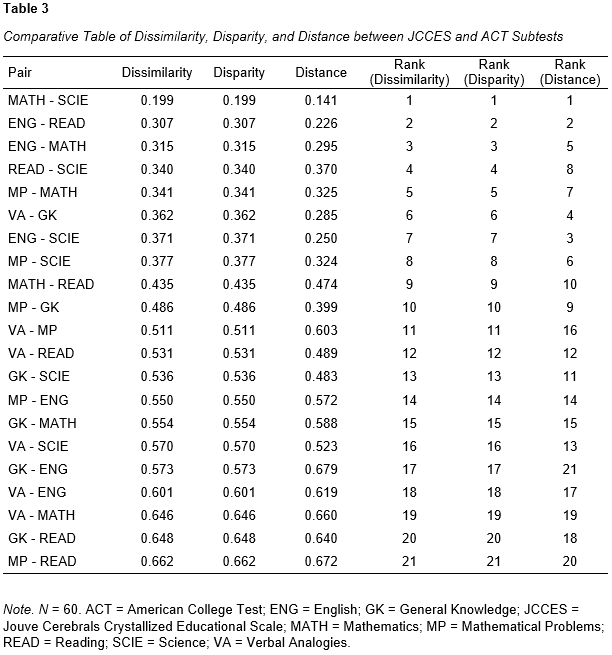

In this configuration, distances between the task scores were measured (Table 2), and the dissimilarity, disparity, and distance values were compared for each pair (Table 3). The Shepard diagram demonstrated a strong relationship between the dissimilarity and distance values, with an RSQ value of 0.90, indicating a good fit for the two-dimensional model.
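As a minimal sketch of how an RSQ value of this kind can be obtained, the snippet below computes a squared Pearson correlation between two lists. It assumes RSQ denotes the squared correlation between the fitted distances and the disparities (which would coincide with the dissimilarities if the absolute model leaves them untransformed). The function name and the toy numbers are hypothetical and are not taken from Table 3.

```python
def r_squared(xs, ys):
    """Squared Pearson correlation between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy ** 2 / (sxx * syy)


# Hypothetical values for illustration only.
toy_disparities = [0.20, 0.35, 0.50, 0.65]
toy_distances = [0.25, 0.30, 0.55, 0.60]
toy_rsq = r_squared(toy_disparities, toy_distances)
```

Applied to the pairwise values in Table 3, a function of this form would be expected to reproduce the reported figure if the assumption about how RSQ is defined holds.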

Interpretation of Results and Hypothesis Testing

The MDS analysis results provided evidence that the two-dimensional representation space offered the most accurate configuration for the dataset. Dimension 1 differentiated between literary and scientific tasks, while Dimension 2 distinguished between ACT and JCCES tasks. This configuration supported the research hypotheses, suggesting that the tasks could be effectively differentiated along these two dimensions.

Dimension 1 (Dim1): Literary and Scientific Tasks.

On this dimension, negative values represented literary tasks and positive values represented scientific tasks. The tasks were distributed along this dimension as follows:

Literary tasks:

- Verbal Analogies (VA): -0.305

- General Knowledge (GK): -0.050

- English (ENG): -0.023

- Reading (READ): -0.240

Scientific tasks:

- Mathematical Problems (MP): 0.294

- Mathematics (MATH): 0.225

- Science (SCIE): 0.098

Dimension 2 (Dim2): JCCES and ACT Tasks.

On this dimension, positive values represented JCCES tasks and negative values represented ACT tasks. The tasks were distributed along this dimension as follows:

JCCES (Non-Scholastic) tasks:

- Verbal Analogies (VA): 0.245

- Mathematical Problems (MP): 0.169

- General Knowledge (GK): 0.371

ACT (Scholastic) tasks:

- English (ENG): -0.307

- Mathematics (MATH): -0.149

- Reading (READ): -0.240

- Science (SCIE): -0.089
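The two groupings above can be checked directly against the signs of the reported coordinates. The sketch below uses only the values listed for Dimension 1 and Dimension 2; the variable and function names are hypothetical.

```python
# Reported coordinates: task -> (Dimension 1, Dimension 2).
coords = {
    "VA": (-0.305, 0.245),
    "MP": (0.294, 0.169),
    "GK": (-0.050, 0.371),
    "ENG": (-0.023, -0.307),
    "MATH": (0.225, -0.149),
    "READ": (-0.240, -0.240),
    "SCIE": (0.098, -0.089),
}

literary = {"VA", "GK", "ENG", "READ"}
jcces = {"VA", "MP", "GK"}


def tasks_with_sign(dim, positive):
    """Tasks whose coordinate on the given dimension has the requested sign."""
    return {task for task, xy in coords.items() if (xy[dim] > 0) == positive}


# Dimension 1: negative side should be exactly the literary tasks.
dim1_matches = tasks_with_sign(0, positive=False) == literary
# Dimension 2: positive side should be exactly the JCCES tasks.
dim2_matches = tasks_with_sign(1, positive=True) == jcces
# Task lying closest to zero on Dimension 1.
closest_to_zero_dim1 = min(coords, key=lambda task: abs(coords[task][0]))
```

Both checks hold for the reported values. English (-0.023) and General Knowledge (-0.050) lie close to zero on Dimension 1, so their placement on the literary side rests on a small margin.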

The distances measured in the two-dimensional representation space showed that the tasks were effectively differentiated along these dimensions. For example, the distance between Mathematical Problems (MP) and Science (SCIE) was relatively small (0.325), indicating their similarity. In contrast, the distance between MP and Reading (READ) was larger (0.672), demonstrating their distinction.
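The two distances quoted above can be recomputed from the reported coordinates, assuming the distances in the representation space are Euclidean. The sketch below uses only the coordinates of the three tasks involved; the variable names are hypothetical.

```python
import math

# Reported (Dimension 1, Dimension 2) coordinates for the three tasks.
mp = (0.294, 0.169)
scie = (0.098, -0.089)
read = (-0.240, -0.240)

# Euclidean distances in the two-dimensional configuration.
dist_mp_scie = math.dist(mp, scie)
dist_mp_read = math.dist(mp, read)

# Differences from the values quoted in the text (0.325 and 0.672).
gap_mp_scie = abs(dist_mp_scie - 0.325)
gap_mp_read = abs(dist_mp_read - 0.672)
```

Both recomputed distances agree with the quoted values to within roughly 0.001, which is consistent with the coordinates having been rounded to three decimals.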

In addition to the distances measured in the representation space, the comparative table provided further evidence supporting the two-dimensional configuration. The table displayed the dissimilarity, disparity, and distance values for each pair of tasks, along with their respective ranks. This information allowed for a more nuanced understanding of the relationships among the tasks in the two-dimensional space.

Limitations

Some limitations should be considered when interpreting these findings. The sample size (N = 60) limits the generalizability of the results. Furthermore, selection bias and methodological choices, such as the stress criterion or the initial configuration settings, could have influenced the outcomes.

Discussion

Interpretation of Results and Relationship to Hypotheses and Previous Research

The finding that the tasks can be effectively differentiated along the literary versus scientific dimension is consistent with prior research, which has identified separate components of cognitive abilities, such as fluid and crystallized intelligence (Cattell, 1987; Horn & Cattell, 1966). Fluid intelligence, which encompasses reasoning and problem-solving abilities, is often associated with mathematical and scientific tasks, while crystallized intelligence, which includes knowledge and vocabulary, is typically linked to verbal and literary tasks. The present study extends this line of inquiry by providing further evidence for the distinction between literary and scientific tasks and highlighting the relevance of this differentiation for understanding individual differences in cognitive abilities.

The differentiation between JCCES and ACT tasks on Dimension 2 aligns with the notion that different assessments might measure specific aspects of cognitive abilities, even when assessing ostensibly similar constructs. This finding resonates with the work of Embretson (1998) and others, who have argued for the importance of considering the unique characteristics of various assessment tools and the potential impact of these differences on the interpretation of test scores. Additionally, the distinction between JCCES and ACT tasks supports the argument made by Sternberg (2003) that the measurement of cognitive abilities is context-dependent and that different assessments might capture different aspects of intellectual functioning depending on the specific tasks and domains they assess.

Existing literature has demonstrated significant correlations between the ACT and intelligence measures, indicating that performance on the ACT is closely related to general cognitive abilities (Coyle & Pillow, 2008). These findings suggest that the ACT serves as a valuable tool for assessing intellectual functioning in the context of academic achievement. However, despite the strong relationship between the ACT and intelligence measures, the present study shows that the ACT can be distinguished from the intelligence assessment provided by the JCCES. This distinction highlights the importance of considering the unique characteristics and purposes of different assessments when interpreting test scores and drawing conclusions about cognitive abilities.

In light of the present study's findings, Dimension 2 might be interpreted as a scholastic versus non-scholastic dimension, reflecting the distinct focus of the ACT as a scholastic assessment, in contrast to the more general cognitive assessment offered by the JCCES. This interpretation aligns with the work of scholars such as Messick (1989) and others, who have argued that scholastic assessments like the ACT are designed to measure not only general cognitive abilities but also specific knowledge, skills, and strategies that are relevant to academic success. In this sense, the differentiation between the JCCES and ACT tasks on Dimension 2 underscores the importance of recognizing the distinct purposes and underlying constructs assessed by different cognitive measures and highlights the need for a more nuanced understanding of the relationships among various aspects of intellectual functioning.

The present study builds upon previous research that found a single underlying factor accounting for the majority of the variance in both the JCCES and the ACT (Jouve, 2014a). That earlier study concluded that both assessments measured a common cognitive construct, which may be interpreted as general cognitive ability or intelligence. While these findings contribute valuable insights into the relationship between intelligence assessments and college admission tests, the present study extends this understanding by highlighting the multidimensional structure of cognitive abilities.

Our study demonstrates that, in addition to the shared cognitive construct identified in the previous research, the JCCES and ACT tasks can be effectively differentiated along two dimensions: literary versus scientific tasks (Dimension 1) and JCCES versus ACT tasks (Dimension 2). This finding suggests a more complex relationship between these assessments and their underlying constructs and implies that the assessments capture not only the general cognitive ability but also domain-specific skills and knowledge.

This multidimensional perspective on cognitive abilities aligns with the conclusions drawn from the previous research, emphasizing the importance of understanding the underlying factors that contribute to performance on intelligence and college admission assessments. The implications of these findings for theory and practice are significant, as they may inform the development of more effective testing methods and assessment strategies in the future.

The present study's findings also contribute to the broader literature on the structure of cognitive abilities by providing empirical support for a multidimensional model. This model aligns with the work of McGrew (2009) and others, who have proposed that cognitive abilities are best understood as a complex system of interrelated but distinct components, rather than a single, monolithic construct. The identification of separate dimensions for literary versus scientific tasks and JCCES versus ACT tasks adds to this growing body of research and highlights the need for a more nuanced understanding of the interplay among various cognitive abilities.

Implications for Theory, Practice, and Future Research

The results of this study contribute to our understanding of the structure of cognitive abilities and have several practical implications. First, the differentiation between literary and scientific tasks on Dimension 1 can inform the design of targeted interventions and educational curricula, as well as the selection of appropriate assessment tools for different populations. For example, educators and clinicians could use this information to guide the development of instructional materials that emphasize the specific cognitive skills most relevant to a given domain.

Second, the differentiation between JCCES and ACT tasks on Dimension 2 highlights the importance of considering the context in which cognitive assessments are administered, as well as the specific tasks being measured. This finding suggests that practitioners should be cautious when comparing scores across different assessments or interpreting test results in isolation. Instead, a more comprehensive approach, incorporating multiple measures and assessment tools, may be necessary to accurately assess an individual's cognitive abilities.

Future research should continue to explore the multidimensional structure of cognitive abilities, with particular attention to the potential differences between various assessment tools and the influence of contextual factors on test performance. Additionally, researchers should consider examining the underlying cognitive processes and neural mechanisms that contribute to the observed distinctions between literary and scientific tasks, as well as between JCCES and ACT tasks. Such investigations could provide valuable insights into the nature of cognitive abilities and inform the development of more effective interventions and assessment strategies.

Limitations and Alternative Explanations

There are several limitations to the present study that warrant consideration. First, the sample size (N = 60) may have limited the generalizability of the results, and future studies should include larger, more diverse samples to increase the robustness of the findings. Second, the use of Kruskal's stress (1) as the stress criterion and the specific settings for the initial configuration may have influenced the outcomes of the MDS analysis. Researchers should explore alternative stress criteria and configuration settings in future studies to determine whether the observed results are consistent across different analytic approaches.

Furthermore, it is possible that other factors, such as participants' cultural background, educational experiences, or motivational levels, could have contributed to the observed differentiation between literary and scientific tasks, as well as between JCCES and ACT tasks. Future research should examine these potential confounds and investigate the extent to which they may account for the observed patterns in the data.

Directions for Future Research

The present study provided evidence for the multidimensional structure of cognitive abilities, with tasks effectively differentiated along two dimensions: literary versus scientific tasks and JCCES versus ACT tasks. These findings have important implications for the interpretation of test scores, the design of educational interventions, and the selection of appropriate assessment tools. The differentiation between literary and scientific tasks can inform targeted instructional strategies and support the development of curricula that cater to the specific cognitive skills required within each domain. The distinction between JCCES and ACT tasks highlights the importance of considering the context in which cognitive assessments are administered and the potential differences between various assessment tools.

Future research should continue to explore the multidimensional nature of cognitive abilities and investigate the potential differences between assessment tools, as well as the influence of contextual factors on test performance. Additionally, researchers should examine the underlying cognitive processes and neural mechanisms that contribute to the observed distinctions between literary and scientific tasks, as well as between JCCES and ACT tasks. This line of inquiry could provide valuable insights into the nature of cognitive abilities and inform the development of more effective interventions and assessment strategies.

Moreover, future studies should address the limitations of the present study by employing larger, more diverse samples to increase the robustness and generalizability of the findings. Alternative stress criteria and configuration settings in MDS analysis should be explored to assess the consistency of the results across different analytic approaches. Furthermore, researchers should consider examining potential confounding factors, such as participants' cultural background, educational experiences, or motivational levels, to better understand the extent to which they may account for the observed patterns in the data.

In sum, the present study contributes to our understanding of the structure of cognitive abilities and underscores the importance of considering the multidimensional nature of intellectual functioning in both research and practice. By building on these findings and addressing the limitations of the current study, future research can continue to advance our knowledge of cognitive abilities and inform the development of more effective educational interventions and assessment strategies.

Conclusion

The present study demonstrated the multidimensional structure of cognitive abilities, differentiating tasks along two dimensions: literary versus scientific tasks and JCCES versus ACT tasks. These findings offer valuable insights for interpreting test scores, designing educational interventions, and selecting suitable assessment tools. The differentiation between literary and scientific tasks can guide targeted instructional strategies and inform the development of curricula tailored to the specific cognitive skills required in each domain. The distinction between JCCES and ACT tasks emphasizes the importance of considering the context of cognitive assessments and the potential differences between various assessment tools.

Implications for theory and practice include informing the development of more effective testing methods and assessment strategies. However, the study's limitations, such as sample size and potential confounding factors, should be addressed in future research. Exploring alternative stress criteria and configuration settings in MDS analysis, as well as examining the underlying cognitive processes and neural mechanisms, could provide valuable insights into the nature of cognitive abilities and inform the development of more effective interventions and assessment strategies.

In conclusion, this study contributes to our understanding of the structure of cognitive abilities and highlights the importance of considering the multidimensional nature of intellectual functioning in both research and practice. Future research can build on these findings to advance our knowledge of cognitive abilities and inform the development of more effective educational interventions and assessment strategies.

References

ACT (2016). American College Test. Retrieved from https://www.act.org/

Carroll, J. B. (1993). Human cognitive abilities: A survey of factor-analytic studies. New York: Cambridge University Press. https://doi.org/10.1017/CBO9780511571312

Cattell, R. B. (1971). Abilities: Their structure, growth, and action. Boston, MA: Houghton Mifflin.

Cattell, R. B. (1987). Intelligence: Its Structure, Growth and Action. New York: North-Holland.

Coyle, T. R., & Pillow, D. R. (2008). SAT and ACT predict college GPA after removing g. Intelligence, 36(6), 719–729. https://doi.org/10.1016/j.intell.2008.05.001

Creswell, J. W. (2014). Research Design: Qualitative, Quantitative and Mixed Methods Approaches (4th ed.). Thousand Oaks, CA: Sage.

Deary, I. J. (2001). Intelligence: A very short introduction. Oxford, UK: Oxford University Press.

Embretson, S. E. (1998). A cognitive design system approach to generating valid tests: Application to abstract reasoning. Psychological Methods, 3(3), 380–396. https://doi.org/10.1037/1082-989X.3.3.380

Horn, J. L., & Cattell, R. B. (1966). Refinement and test of the theory of fluid and crystallized general intelligences. Journal of Educational Psychology, 57(5), 253–270. https://doi.org/10.1037/h0023816

Jouve, X. (2010a). Jouve Cerebrals Crystallized Educational Scale. Retrieved from http://www.cogn-iq.org/tests/jouve-cerebrals-crystallized-educational-scale-jcces

Jouve, X. (2010b). Investigating the Relationship Between JCCES and RIAS Verbal Scale: A Principal Component Analysis Approach. Retrieved from https://cogniqblog.blogspot.com/2010/02/on-relationship-between-jcces-and.html

Jouve, X. (2010c). Relationship between Jouve Cerebrals Crystallized Educational Scale (JCCES) Crystallized Educational Index (CEI) and Cognitive and Academic Measures. Retrieved from https://cogniqblog.blogspot.com/2010/02/correlations-between-jcces-and-other.html

Jouve, X. (2014a). Exploring the Relationship between JCCES and ACT Assessments: A Factor Analysis Approach. Retrieved from https://cogniqblog.blogspot.com/2014/10/exploring-relationship-between-jcces.html

Jouve, X. (2014b). Differentiating Cognitive Abilities: A Factor Analysis of JCCES and GAMA Subtests. Retrieved from https://cogniqblog.blogspot.com/2014/10/differentiating-cognitive-abilities.html

Jouve, X. (2015). Exploring the Underlying Dimensions of Cognitive Abilities: A Multidimensional Scaling Analysis of JCCES and GAMA Subtests. Retrieved from https://cogniqblog.blogspot.com/2014/12/exploring-underlying-dimensions-of.html

Kruskal, J. B., & Wish, M. (1978). Multidimensional scaling. Sage University Paper Series on Quantitative Applications in the Social Sciences, No. 07-011. Thousand Oaks, CA: Sage Publications. http://dx.doi.org/10.4135/9781412985130

McGrew, K. S. (2009). CHC theory and the human cognitive abilities project: Standing on the shoulders of the giants of psychometric intelligence research. Intelligence, 37(1), 1–10. https://doi.org/10.1016/j.intell.2008.08.004

Messick, S. (1989). Validity. In R. L. Linn (Ed.), Educational measurement (pp. 13–103). Macmillan Publishing Co, Inc; American Council on Education.

Sternberg, R. J. (1985). Beyond IQ: A triarchic theory of human intelligence. New York: Cambridge University Press.

Sternberg, R. J. (2003). Wisdom, intelligence, and creativity synthesized. New York: Cambridge University Press. https://doi.org/10.1017/CBO9780511509612

Torgerson, W. S. (1958). Theory and Methods of Scaling. New York: John Wiley.