Psychometric Evaluation of the Jouve Cerebrals Figurative Sequences as a Measure of Nonverbal Cognitive Ability

This study aimed to examine the psychometric properties of the Jouve Cerebrals Figurative Sequences (JCFS), an untimed self-administered test consisting of 50 open-ended problems designed to assess cognitive abilities related to pattern recognition and problem-solving. The study utilized both classical test theory and item response theory to evaluate the internal consistency and concurrent validity of the test. Results showed that the JCFS, like its first half, the Cerebrals Contest Figurative Sequences (CCFS), has strong internal consistency and good discriminatory power. Moreover, the test demonstrated a high level of concurrent validity, as evidenced by its significant correlation with other measures of cognitive ability. These findings suggest that the JCFS is a reliable and valid measure of cognitive abilities related to pattern recognition and problem-solving. However, the study is limited by the small sample size used in the analysis, and future research should aim to confirm these findings with larger and more diverse samples.

JCFS, psychometric properties, internal consistency, item response theory, concurrent validity, open-ended items, untimed self-administered test

The Jouve Cerebrals Figurative Sequences (JCFS) is a recently developed untimed self-administered test aimed at measuring an individual's cognitive ability, specifically their fluid reasoning. The test is based on a set of 25 problems proposed during the 2012 Contest of the Cerebrals Society, and an additional set of 25 new problems of lower difficulty has been added to broaden the selection of items for a more relevant assessment. The aim of this study is to explore the psychometric properties of the JCFS, including its internal consistency and concurrent validity.

Psychometrics is the branch of psychology concerned with the measurement of psychological attributes, such as intelligence, personality, and abilities. Psychometric tests are commonly used to evaluate an individual's cognitive abilities and predict their performance in various contexts, such as education and employment. Theories and instruments relevant to psychometrics have been developed over time, and these have contributed to the development of new tests such as the JCFS.

In terms of the JCFS's properties, the internal consistency of the Cerebrals Contest Figurative Sequences (CCFS), a precursor to the JCFS, was measured at .927, which is compatible with psychometric use of the scale and ensures an acceptable error of measurement. Furthermore, an Item Response Theory (IRT) study showed that the CCFS items discriminated well. This result was obtained using a two-parameter logistic model (2PLM), which is a common method for item analysis in open-ended tests. However, the analysis of Figurative Sequences is more complex than that of closed-ended tests, because examinees may respond in unexpected but accurate ways. Therefore, more studies are needed to establish the reliability and validity of the JCFS and to determine the best analysis method.

Regarding concurrent validity, the CCFS has been found to correlate significantly with the nonverbal Analogies subtest of the General Ability Measure for Adults (GAMA), with a correlation of .710 indicating a strong relationship. Even stronger correlations were observed with the Wechsler Adult Intelligence Scale - Third Edition (WAIS-III) Matrix Reasoning subtest (.840 for the CCFS and .890 for the JCFS), supporting the JCFS as a valid measure of cognitive ability.

The JCFS has shown promising results for internal consistency, with a score reliability of .961 as estimated by the Spearman-Brown prophecy formula and .948 by Cronbach's alpha. The IRT analysis also gave good results: all items were analyzed, and none was dropped by the estimation process. However, the small samples limit the strength of these results, and more data are needed to confirm the internal consistency and concurrent validity of the JCFS.

The JCFS is a promising test for measuring fluid reasoning, and the psychometric evidence gathered so far is encouraging. However, more studies are needed to establish its validity and reliability, and larger samples are required to confirm these findings. The development of new tests and instruments is essential for the continuous improvement of psychometric evaluation and for a better understanding of cognitive abilities.

Literature Review

Assessing nonverbal ability has been a central area of research in psychology, given the importance of nonverbal intelligence in various domains of life, such as education and employment (Hunt, 1995; Sackett et al., 2001). As a result, several tests of nonverbal ability have been developed over the years. In this literature review, we will explore the evolution of nonverbal ability assessment, starting from the early development of tests like the Raven Progressive Matrices (Raven, 1938) to more recent tests like the Jouve Cerebrals Figurative Sequences (JCFS). We will also examine the strengths and limitations of these tests and their relevance to contemporary research in nonverbal ability assessment.

Developing culturally fair and reliable tests has been difficult (Rushton & Jensen, 2010). One of the earliest tests of nonverbal ability was the Raven Progressive Matrices, which was created in the 1930s (Raven, 1938). The Raven test measured nonverbal intelligence using abstract patterns and quickly became one of the most widely used tests in the field (Raven et al., 1983). However, the Raven test has faced criticisms of cultural bias and low sensitivity to certain forms of nonverbal intelligence (Kyllonen & Christal, 1990).

In recent years, other nonverbal tests have been developed to address some of the criticisms of the Raven test. For example, the Universal Nonverbal Intelligence Test (UNIT) was designed to reduce cultural bias by using pictures instead of words to measure intelligence (Bracken & McCallum, 1998). Similarly, the Wechsler Nonverbal Scale of Ability (WNV) was developed to provide a culturally neutral assessment of nonverbal ability (Wechsler & Naglieri, 2006). However, some researchers have raised concerns that nonverbal tests may still be subject to cultural influences and may not accurately measure nonverbal intelligence in diverse populations (Durant et al., 2019).

In addition to the UNIT and WNV, other tests of nonverbal ability have also been developed. The Leiter International Performance Scale, Third Edition (Leiter-3) is a nonverbal measure of intelligence that uses visual and spatial tasks to assess cognitive abilities (Roid & Miller, 2013). The Cognitive Assessment System (CAS) is another nonverbal measure that assesses cognitive abilities, including planning, attention, simultaneous and successive processing, and cognitive flexibility (Naglieri & Otero, 2018).

The Jouve Cerebrals Figurative Sequences (JCFS) is a recent addition to the pool of nonverbal ability tests, and it utilizes grids or matrices made of 3 rows and 3 columns in each of its 50 open-ended items. The use of grids is an intentional design choice, as they provide a clear and structured visual representation of the problem for the examinee (Uttal et al., 2013). This design choice also allows for the assessment of spatial reasoning and visualization skills, as the examinee must use the grids to logically complete the sequence of crosses (Mix et al., 2016). By incorporating grids or matrices, the JCFS aims to effectively measure these crucial aspects of nonverbal ability while minimizing potential biases and providing a structured format for examinees.

The open-ended nature of the items in intelligence tests is an intentional design choice that allows for greater flexibility in scoring and interpretation (Kaufman & Kaufman, 2004). Examinees must use their own reasoning and problem-solving skills to complete the sequence, rather than being limited to a set of predetermined responses (Sternberg, 2003). This design choice also reduces the potential for cultural bias, as it allows examinees to draw on their own experiences and knowledge to solve the problem (Sternberg & Grigorenko, 2002). Additionally, incorporating open-ended tasks in assessments can help identify and develop talents in domains such as spatial ability, which is crucial for success in STEM fields (Lubinski, 2010).

The untimed format of the JCFS is another unique feature that reduces the potential for test anxiety and allows for greater flexibility in administration. Research has shown that timed tests can create artificial pressure on the examinee, leading to reduced performance (Spielberger & Vagg, 1995; Imbo & LeFevre, 2009). The untimed format of the JCFS allows for the examinee to take their time and approach the problem at their own pace, which may lead to a more accurate assessment of their nonverbal ability.

Overall, the use of grids for items, open-ended items, and an untimed format in the JCFS represents intentional design choices that provide unique advantages in nonverbal ability assessment. However, further research is necessary to establish the reliability, validity, and cultural fairness of the test, as well as its potential limitations (Schneider & McGrew, 2012; Sireci & Geisinger, 1992). It is important to evaluate these aspects of the test to ensure that it provides a meaningful and accurate assessment of nonverbal ability in diverse populations.

Method

Research Design

The present study utilized a correlational research design to examine the psychometric properties of the Jouve Cerebrals Figurative Sequences (JCFS) and the Cerebrals Contest Figurative Sequences (CCFS). This design was chosen because it allows for the exploration of relationships between different variables without manipulation, such as the relationship between the scores on the JCFS or CCFS and other established measures of cognitive ability (Cohen, Manion, & Morrison, 2013).

Participants

The study included a total of 129 participants, with 89 participants in the CCFS sample and 40 participants in the JCFS sample. Participant demographic information, such as age, gender, and ethnicity, was not provided in the data set. Inclusion criteria for the study were not specified, though it can be assumed that participants were adults, given the nature of the cognitive assessments used in the study.

Materials

The primary materials used in this study were the Jouve Cerebrals Figurative Sequences (JCFS) and the Cerebrals Contest Figurative Sequences (CCFS). Both tests are untimed, self-administered measures of nonverbal cognitive ability, consisting of open-ended items in which participants complete a series of grids or matrices based on logical patterns (Jouve, 2012).

For the concurrent validity analysis, the study also utilized the Wechsler Adult Intelligence Scale-Third Edition (WAIS-III; Wechsler, 1997) and the General Ability Measure for Adults (GAMA; Naglieri & Bardos, 1997). The WAIS-III is a well-established and widely used measure of general cognitive ability, while the GAMA is a brief, nonverbal measure of general intelligence.

Procedures

Participants completed the JCFS or CCFS individually, following the provided instructions. The order of tasks within each assessment was predetermined and consistent across participants. For the concurrent validity analysis, a subsample of 32 CCFS participants also completed the GAMA and the WAIS-III, and a subsample of 27 JCFS participants also completed the WAIS-III Matrix Reasoning subtest.

Data Analysis

To evaluate the internal consistency of the JCFS and CCFS, Cronbach's alpha was calculated for each test (Tavakol & Dennick, 2011). Item difficulty, standard deviation, and polyserial correlation were also calculated for each item on the CCFS (Nunnally & Bernstein, 1994).
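
As an illustration of the coefficient named above, Cronbach's alpha can be computed from a matrix of item scores as k/(k − 1) multiplied by one minus the ratio of summed item variances to total-score variance. The following is a minimal sketch, not the software used in the study; the function name and the toy response matrix are hypothetical and invented for the example.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of examinee rows of item scores (0/1)."""
    k = len(scores[0])  # number of items
    # Sample variance (n - 1 denominator) of each item across examinees
    item_vars = [variance([float(row[j]) for row in scores]) for j in range(k)]
    # Sample variance of the total score across examinees
    total_var = variance([float(sum(row)) for row in scores])
    return (k / (k - 1)) * (1.0 - sum(item_vars) / total_var)


# Toy data (invented, NOT from the study): 5 examinees x 4 dichotomous items
toy_scores = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
alpha = cronbach_alpha(toy_scores)
print(round(alpha, 3))
```

With only four items and five examinees the toy value says nothing about the CCFS or JCFS; it only shows the arithmetic behind the coefficient.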

Item response theory (IRT) analyses were conducted using the Bayes modal estimator (BME) and the two-parameter logistic model (2PLM) in its normal-ogive form (Baker, 2001). Chi-square tests of fit, slope (a), and threshold (b) parameters were calculated for each item.
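
To make the roles of the slope and threshold concrete, a two-parameter normal-ogive item characteristic curve can be written as the standard normal cumulative distribution evaluated at a(θ − b). The sketch below assumes this textbook parameterization, which may differ in detail from the software used in the study; the function name and the parameter values are hypothetical.

```python
import math


def normal_ogive_2pl(theta, a, b):
    """Probability of a correct response under a two-parameter normal ogive.

    theta: examinee ability, a: slope (discrimination), b: threshold (difficulty).
    """
    z = a * (theta - b)
    # Standard normal CDF expressed through the error function
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


# Hypothetical parameters, NOT estimates from the study
a_flat, a_steep, b = 0.8, 2.5, 0.5

p_at_threshold = normal_ogive_2pl(b, a_steep, b)       # ability equal to threshold
p_flat_above = normal_ogive_2pl(b + 1.0, a_flat, b)    # one unit above, low slope
p_steep_above = normal_ogive_2pl(b + 1.0, a_steep, b)  # one unit above, high slope
print(p_at_threshold, p_flat_above, p_steep_above)
```

An examinee whose ability equals the threshold has a probability of .5 of answering correctly, and a larger slope separates examinees just above and just below that point more sharply.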

Concurrent validity was assessed by calculating Pearson correlation coefficients between the scores on the JCFS or CCFS and the scores on the WAIS-III and GAMA subtests. Correlations were corrected for restriction or un-restriction of range using Thorndike's (1949) Formula 2.
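
The range correction can be sketched as follows, assuming that "Formula 2" refers to the widely cited Case 2 correction for direct range restriction, in which the observed correlation is rescaled by the ratio of the reference standard deviation to the sample standard deviation. The function name and the input values are hypothetical; only the formula itself is standard.

```python
import math


def correct_for_range(r, sd_sample, sd_reference):
    """Correct a correlation for restriction or un-restriction of range.

    r: correlation observed in the sample
    sd_sample: standard deviation of the criterion in the sample
    sd_reference: standard deviation in the reference population
    """
    u = sd_reference / sd_sample
    return (r * u) / math.sqrt(1.0 - r**2 + (r**2) * (u**2))


# Hypothetical inputs, NOT values from the study
r_restricted = correct_for_range(0.50, sd_sample=2.0, sd_reference=3.0)
r_unrestricted = correct_for_range(0.50, sd_sample=4.0, sd_reference=3.0)
print(round(r_restricted, 3), round(r_unrestricted, 3))
```

When the sample is less variable than the reference population the corrected correlation rises, and when it is more variable the corrected correlation falls, which is why the same formula covers both restriction and un-restriction.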

Ethical Considerations

Informed consent was obtained from all participants prior to their participation in the study. Participants were informed that their participation was voluntary, and they could withdraw from the study at any time without penalty. Participants were also informed that their data would be kept confidential and used only for research purposes. The researchers also ensured that the study adhered to the ethical guidelines set forth by the American Psychological Association (APA, 2017).

Results

Internal Consistency

This section presents the results of the internal consistency analysis for both the Cerebrals Contest Figurative Sequences (CCFS) and the Jouve Cerebrals Figurative Sequences (JCFS). For each test, internal consistency was evaluated using Cronbach's alpha and classical test theory (CTT) item statistics, and item functioning was examined using item response theory (IRT).

Cerebrals Contest Figurative Sequences (CCFS).

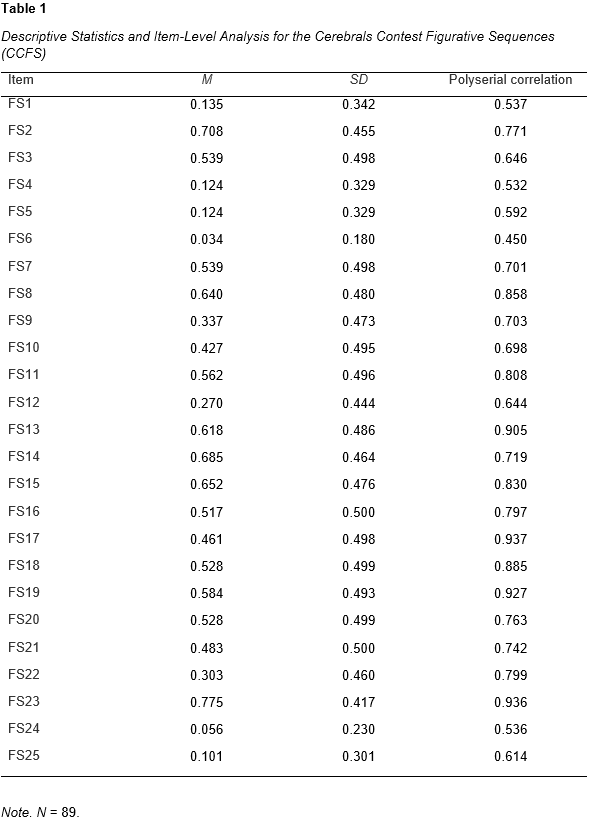

The CCFS internal consistency analysis, using a sample size of N = 89, yielded a Cronbach's Alpha of .927, which indicates a strong reliability of the test items (Tavakol & Dennick, 2011). The item statistics are presented in Table 1, including the mean (difficulty), standard deviation, and polyserial correlation for each item. For example, item FS1 has a mean difficulty of .135, a standard deviation of .342, and a polyserial correlation of .537. In contrast, item FS23 shows a higher mean difficulty of .775, a standard deviation of .417, and a polyserial correlation of .936, indicating that this item is less challenging and has a stronger relationship with the overall scale.

Item FS6 has the lowest mean (.034), making it the most difficult item, and the lowest polyserial correlation (.450) among all items, with a standard deviation of .180. On the other hand, item FS17 has the highest polyserial correlation (.937) and an intermediate mean difficulty of .461, with a standard deviation of .498. This suggests that FS17 is a particularly strong indicator of the underlying construct being measured by the CCFS.

The variability in item statistics across the scale demonstrates the range of difficulty levels and the varying relationships between individual items and the overall scale. The high Cronbach's Alpha value of .927 supports the notion that the CCFS has strong internal consistency and is a reliable measure for the construct it aims to assess. Moreover, the high polyserial correlations of several items (e.g., FS17, FS23, FS19) suggest a strong association between the item scores and the overall latent trait being measured (Olsson, 1979).
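
For a dichotomously scored item, the mean is the proportion of correct responses p, and the standard deviation follows from it as the square root of p(1 − p). The sketch below illustrates this relationship for four of the items discussed above. The counts of correct responses are an assumption: they are inferred from the rounded means and N = 89 and are not stated in the paper.

```python
import math

N = 89  # CCFS sample size reported in the text

# ASSUMPTION: counts of correct responses inferred from the rounded item means
assumed_correct = {"FS1": 12, "FS6": 3, "FS17": 41, "FS23": 69}


def item_stats(n_correct, n):
    """Return (mean, standard deviation) of a 0/1 item, rounded to 3 decimals."""
    p = n_correct / n                # item mean = proportion correct
    sd = math.sqrt(p * (1.0 - p))    # population SD of a 0/1 variable
    return round(p, 3), round(sd, 3)


stats = {item: item_stats(count, N) for item, count in assumed_correct.items()}
for item, (mean, sd) in stats.items():
    print(item, mean, sd)
```

Under these assumed counts the sketch reproduces the means and standard deviations quoted above for FS1, FS6, FS17, and FS23, which also shows why items with means near .5 have the largest standard deviations.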

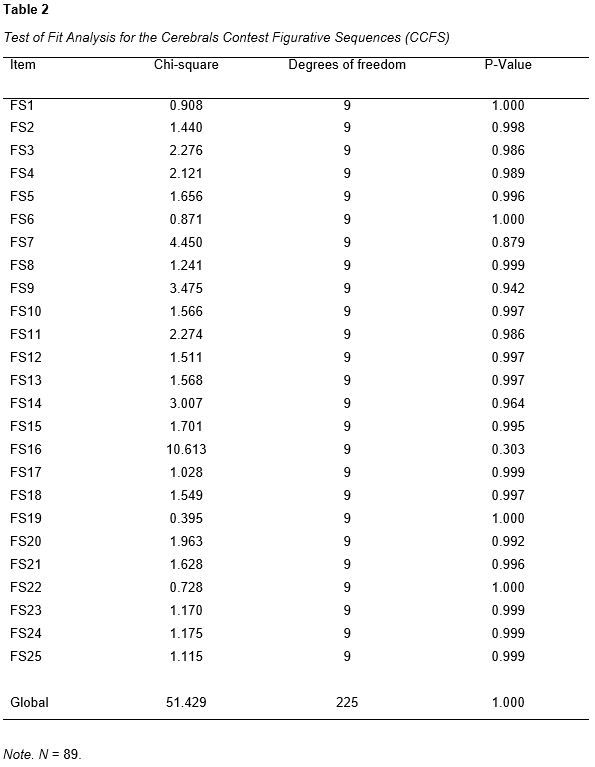

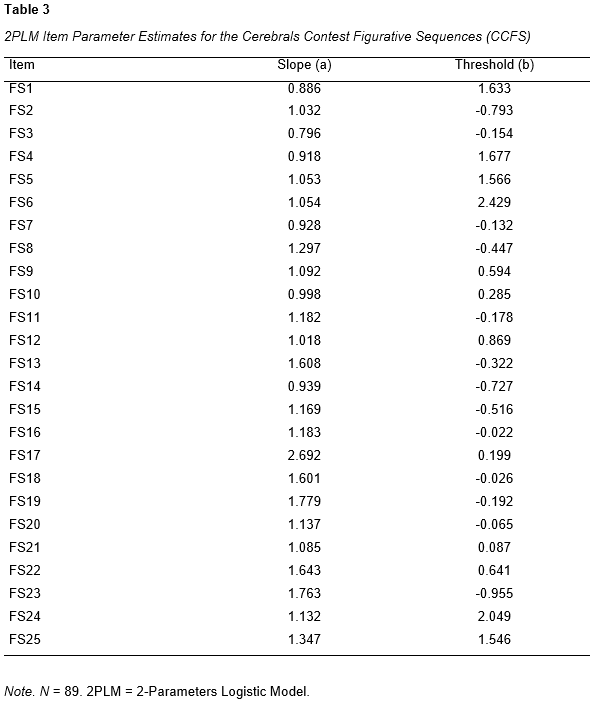

The IRT analysis of the CCFS was conducted using the Bayes modal estimator (BME) and the two-parameter logistic model (2PLM) in its normal-ogive form (Baker, 2001). All items converged, as shown in Table 2. The global chi-square value for the CCFS was 51.429 with 225 degrees of freedom and a p-value of 1.000, indicating a good fit of the model to the data (de Ayala, 2009). Table 3 presents the item-level information on the slope (a) and threshold (b) parameters for the CCFS.

A detailed examination of the fit statistics in Table 2 reveals that all items had a p-value greater than .05, indicating no significant misfit (Hambleton & Swaminathan, 1985). This suggests that the items in the CCFS are well-suited for measuring the intended construct. The slope and threshold parameters in Table 3 provide additional insight into the performance of individual items, with higher slopes indicating greater item discrimination and threshold values reflecting item difficulty (Embretson & Reise, 2000).

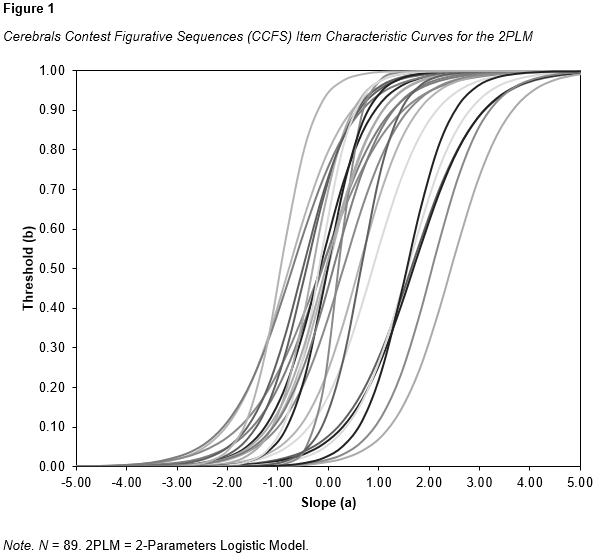

Parameter estimates, including slope (a) and threshold (b), were also obtained for each item and are presented in Table 3. The slope values ranged from .777 (FS3) to 2.702 (FS17), while threshold values varied from -.937 (FS23) to 2.339 (FS6). These results provide insights into the discriminating ability and difficulty level of each item, allowing researchers to fine-tune the CCFS instrument for better measurement of the underlying construct. Figure 1 presents the Item Characteristic Curves for the CCFS items, using the 2PLM.

Jouve Cerebrals Figurative Sequences (JCFS).

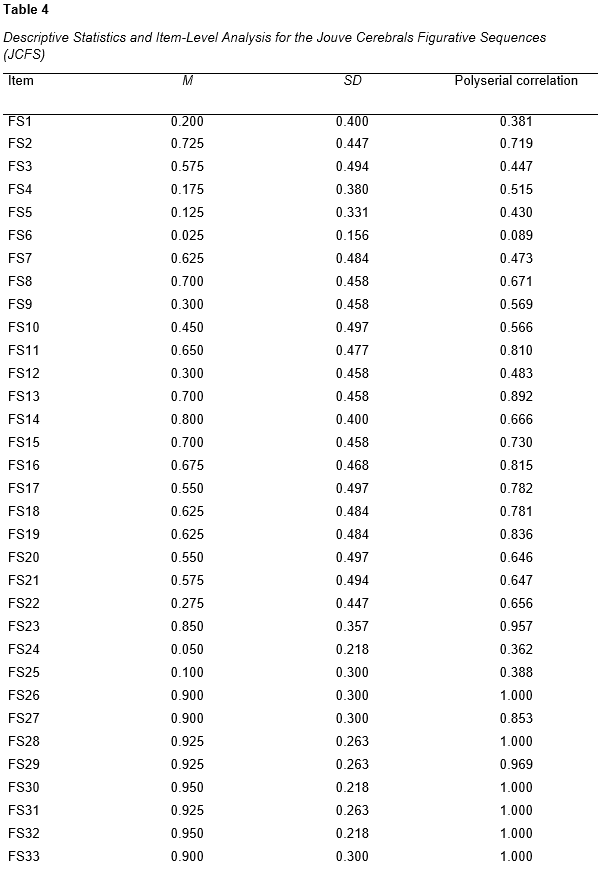

The JCFS (N = 40) also exhibited high internal consistency, with a Spearman-Brown coefficient of .961 and a Cronbach's Alpha of .948, further supporting the reliability of the test items (Eisinga et al., 2013). Table 4 displays the item-level information for each of the 50 JCFS items, with mean scores ranging from .025 to .975, standard deviations ranging from .156 to .499, and polyserial correlations ranging from .089 to 1.000. The highest polyserial correlations (e.g., FS26, FS28, FS30, FS31, FS32, FS33, FS34, FS36, FS37, FS39, FS42, FS44, FS46, FS47) indicate a perfect association between the item scores and the latent trait being measured, which may suggest that these items are particularly effective at assessing the intended construct (Olsson, 1979).
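
The Spearman-Brown estimate can be illustrated with a split-half sketch: the test is divided into two halves, the half scores are correlated, and the correlation r is stepped up to full length as 2r / (1 + r). The paper does not state how the halves were formed, so the odd-even split below is an assumption, and the function names and toy data are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def split_half_spearman_brown(scores):
    """Odd-even split-half reliability with the Spearman-Brown step-up."""
    odd_half = [sum(row[0::2]) for row in scores]   # items 1, 3, 5, ...
    even_half = [sum(row[1::2]) for row in scores]  # items 2, 4, 6, ...
    r_half = pearson(odd_half, even_half)
    return 2.0 * r_half / (1.0 + r_half)


# Toy data (invented, NOT from the study): 5 examinees x 4 dichotomous items
toy_scores = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
reliability = split_half_spearman_brown(toy_scores)
print(round(reliability, 2))
```

Because the result depends on how the items are split, a split-half estimate and Cronbach's alpha computed on the same data can differ, as the two JCFS coefficients do.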

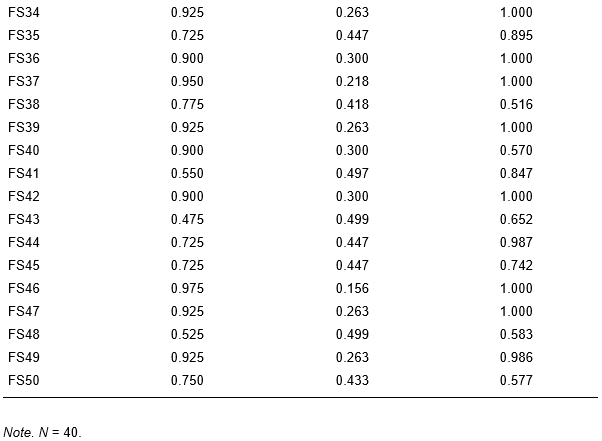

The test of fit, presented in Table 5, provides chi-square values, degrees of freedom, and p-values for each of the 50 items (FS1 to FS50). The chi-square values range from a minimum of .226 (FS24) to a maximum of 6.632 (FS50). Degrees of freedom range from 1 (FS30, FS32, FS37, FS46) to 9 for most of the other items. P-values vary from .205 (FS30, FS32, FS37) to 1.000 (e.g., FS4, FS5, FS9, FS11, FS21, FS24, FS36, FS41, FS44, FS45), with the global test of fit p-value being 1.000.

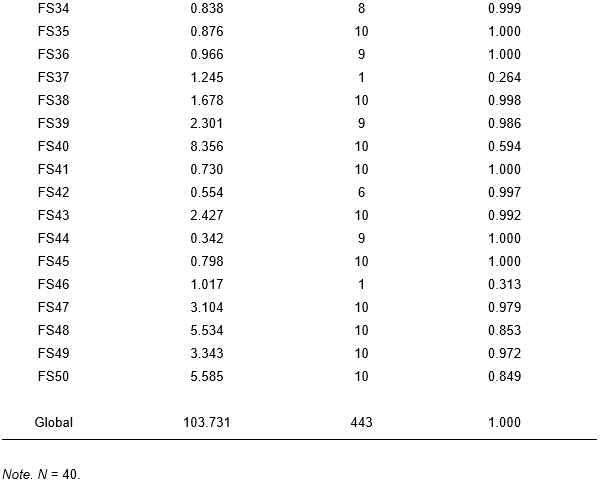

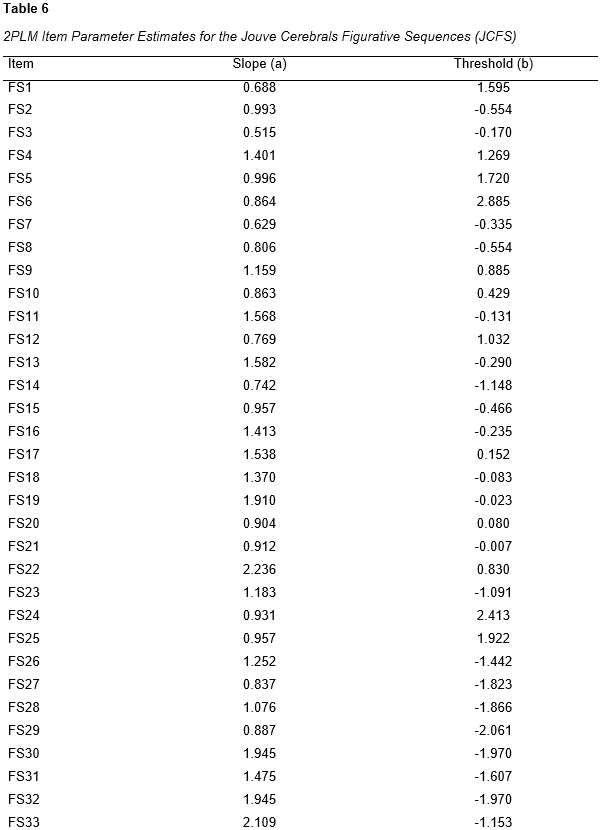

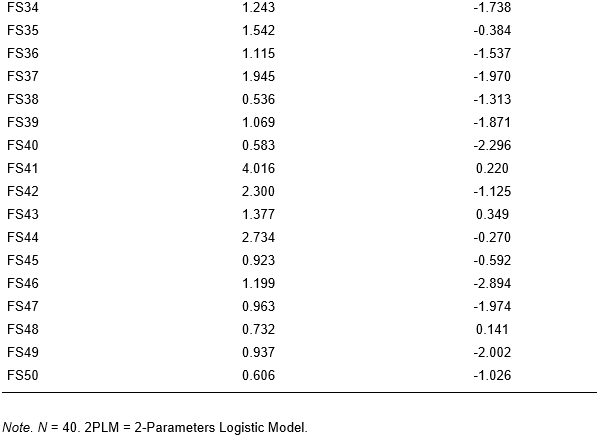

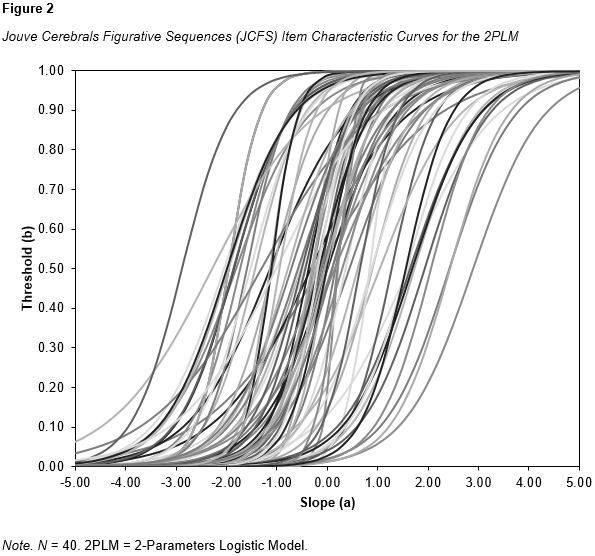

Parameter estimates for each item are shown in Table 6, with slope (a) and threshold (b) values. Slope values range from .559 (FS38) to 4.236 (FS41), while threshold values range from -2.861 (FS46) to 2.541 (FS20). Some items with the highest slopes include FS41 (4.236), FS42 (2.352), and FS44 (2.840), while items with the lowest slopes are FS3 (.608), FS7 (.704), and FS38 (.559). In terms of threshold values, items with the highest thresholds include FS6 (2.489), FS20 (2.541), and FS25 (2.401), while the lowest thresholds are observed in items such as FS46 (-2.861), FS29 (-2.011), and FS40 (-2.067). Figure 2 displays all the curves.

Concurrent Validity

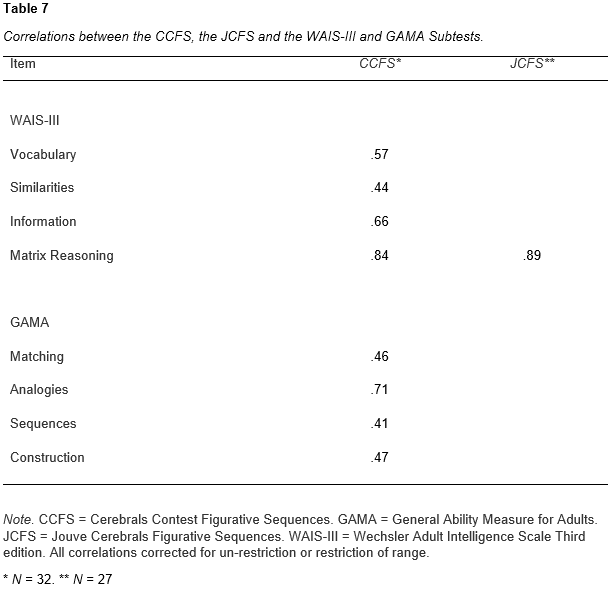

To establish concurrent validity, all correlations presented in this section were corrected for restriction or un-restriction of range, so as to give a fair estimate of the relationship between the CCFS or the JCFS and the criterion tests. The correction assumed a standard deviation of 3, the value set for general population normalization of these measures, and used the formula given by Thorndike (1949). Correlation magnitudes were interpreted following Cohen (1988). All correlations are given in Table 7.

Cerebrals Contest Figurative Sequences (CCFS).

First, correlations between the CCFS and the GAMA were computed using data from a sample of 32 individuals. Results revealed a moderate correlation between the CCFS and the GAMA Matching subtest (r = .46). A strong correlation was observed between the CCFS and the GAMA Analogies subtest (r = .71), while the correlations with the GAMA Sequences subtest (r = .41) and the GAMA Construction subtest (r = .47) were moderate.

Second, correlations between the CCFS and the WAIS-III were also calculated using data from the same sample of 32 participants. The analysis showed a moderate correlation between the CCFS and the WAIS-III Vocabulary subtest (r = .57) and the WAIS-III Similarities subtest (r = .44). A strong correlation was found between the CCFS and the WAIS-III Information subtest (r = .66) and a very strong correlation with the WAIS-III Matrix Reasoning subtest (r = .84).

Jouve Cerebrals Figurative Sequences (JCFS).

The correlation between the JCFS and the WAIS-III Matrix Reasoning subtest was calculated using data from a sample of 27 individuals. The results demonstrated a very strong correlation between the two tests (r = .89).

In summary, the concurrent validity analyses revealed moderate to very strong correlations between the CCFS or the JCFS and the criterion tests, specifically the GAMA and the WAIS-III. These findings suggest that the JCFS possesses adequate concurrent validity as a measure of nonverbal ability. The strongest correlations were observed with the WAIS-III Matrix Reasoning subtest (r = .89) and the GAMA Analogies subtest (r = .71), providing evidence that the JCFS may be particularly useful in assessing nonverbal reasoning and problem-solving abilities.

Discussion

The present study examined the psychometric properties of the Jouve Cerebrals Figurative Sequences (JCFS) and its first half, the Cerebrals Contest Figurative Sequences (CCFS), as measures of nonverbal cognitive ability. The results of the study provided support for the internal consistency and concurrent validity of the test. The following sections will discuss the results in the context of the research hypotheses and previous research in the field, as well as their implications and limitations.

Internal Consistency

The internal consistency analysis of the CCFS revealed a Cronbach's alpha of .927, indicating strong reliability of the test items. The IRT analysis also showed good fit statistics for all items, indicating that the items were well-suited for measuring the intended construct. The JCFS exhibited high internal consistency, with a Spearman-Brown coefficient of .961 and a Cronbach's alpha of .948, indicating that the test items were reliable and consistent.

The item-level statistics of the JCFS provided insight into the discriminating ability and difficulty level of each item. The polyserial correlations of several items were especially strong, suggesting that these items are particularly effective at assessing the intended construct. The slope and threshold values revealed a range of difficulty levels and item discrimination, allowing researchers to fine-tune the instrument for better measurement of the underlying construct.

Concurrent Validity

The concurrent validity analysis showed moderate to very strong correlations between the CCFS or the JCFS and the criterion tests, specifically subtests of the GAMA and the WAIS-III. These findings suggest that the JCFS possesses adequate concurrent validity as a measure of nonverbal ability. The strongest correlations were observed with the WAIS-III Matrix Reasoning subtest (r = .89) and the GAMA Analogies subtest (r = .71), providing evidence that the JCFS may be particularly useful in assessing nonverbal reasoning and problem-solving abilities.

Implications for Theory and Practice

The findings of the present study have implications for both theory and practice. The strong internal consistency and concurrent validity of the JCFS suggest that this test is a reliable and valid measure of nonverbal cognitive ability. These findings are important for researchers who are interested in using the JCFS in future studies, as it provides evidence of its psychometric properties.

The results of the concurrent validity analysis also suggest that the JCFS may be particularly useful in assessing nonverbal reasoning and problem-solving abilities. These findings have implications for practitioners who may use it to evaluate nonverbal cognitive ability in a clinical or educational setting. The use of the JCFS may be particularly useful in identifying individuals who are, for instance, gifted in nonverbal reasoning, as it demonstrated a strong correlation with the WAIS-III Matrix Reasoning subtest.

Limitations

The limitations of the present study include the lack of demographic information about the participants, which could have provided insight into the generalizability of the results to different populations (Lavrakas, 2008). Additionally, the study did not include a measure of general cognitive ability, which could have provided additional information about the relationship between nonverbal cognitive ability, as measured by the JCFS, and overall cognitive functioning (Neisser et al., 1996). Finally, the correlational design used in the study limits the ability to draw causal conclusions about the relationship between the variables (Babbie, 2016; Creswell & Creswell, 2017).

Future Research

Future research should aim to address the limitations of the present study. Demographic information should be collected and analyzed to determine whether the results are generalizable to different populations. Including information on participants' age, gender, ethnicity, and educational level can help researchers determine whether the JCFS is appropriate for use with different groups. Moreover, future studies should aim to replicate the results of this study using larger and more diverse samples.

Another limitation of this study is that the CCFS accounts for only half of the JCFS, which may hinder the generalization of the CCFS results to the whole JCFS. The CCFS was analyzed here as a component of the JCFS; henceforth, only the full JCFS will be used. Future research should therefore investigate the psychometric properties of the entire JCFS, including its internal consistency and its concurrent validity with other established cognitive ability measures.

Additionally, the present study did not examine the test-retest reliability of the JCFS. Test-retest reliability refers to the consistency of test scores over time and is an important aspect of test reliability (Nunnally & Bernstein, 1994). Future research should therefore investigate the test-retest reliability of the JCFS to determine whether this measure produces consistent results over time.

Finally, the current study focused on evaluating the internal consistency and concurrent validity of the JCFS, but it did not investigate the predictive validity of this measure. Therefore, it would be beneficial for future research to explore whether performance on the JCFS is predictive of significant outcomes, such as academic achievement, job performance, or quality of life (Poropat, 2009). Examining predictive validity would provide insight into the extent to which the JCFS can be utilized as a reliable tool for decision-making and prediction of future outcomes (Schmidt & Hunter, 2016).

The present study provides evidence for the strong internal consistency and concurrent validity of the JCFS, and of the CCFS as well, as measures of nonverbal cognitive ability. The results of this study support the use of the JCFS in clinical and research settings, particularly for the assessment of nonverbal reasoning and problem-solving abilities. However, further research is needed to determine the generalizability of these results to different populations, to examine the test-retest reliability, and to investigate predictive validity.

It is important to note that the JCFS is intended to assess only a specific aspect of cognitive ability and should not be used as the sole basis for making decisions about an individual's cognitive abilities or potential. Rather, it should be used in conjunction with other measures and assessments to provide a more comprehensive understanding of an individual's cognitive strengths and weaknesses.

Conclusion

The present study provided valuable insights into the psychometric properties of the JCFS as a measure of nonverbal cognitive ability. The results indicated strong internal consistency and concurrent validity of the JCFS and CCFS. The study also revealed that the JCFS is particularly useful in assessing nonverbal reasoning and problem-solving abilities. The findings of the study have important implications for both theory and practice, providing evidence for the reliability and validity of the JCFS and its potential use in clinical and research settings.

Despite the study's strengths, limitations were noted, including the lack of demographic information, the absence of a measure of general cognitive ability, and the correlational design used. Future research should aim to address these limitations, particularly investigating the generalizability of the results to different populations, the test-retest reliability of the JCFS, and its predictive validity. Such research can provide a more comprehensive understanding of the psychometric properties of the JCFS and its usefulness in decision-making and prediction of future outcomes.

Overall, the present study supports the use of the JCFS as a reliable tool for assessing nonverbal cognitive ability. It is essential to use the JCFS in conjunction with other measures and assessments to provide a more comprehensive evaluation of an individual's cognitive strengths and weaknesses. The JCFS is a significant addition to the set of tools available to clinicians and researchers interested in evaluating nonverbal cognitive ability, which can ultimately lead to better decision-making and outcomes.

References

American Psychological Association. (2017). Publication manual of the American Psychological Association (6th ed.). Washington, DC: Author.

Babbie, E. R. (2016). The basics of social research (7th ed.). Boston, MA: Cengage Learning.

Baker, F. B. (2001). The basics of item response theory (2nd ed.). College Park, MD: ERIC Clearinghouse on Assessment and Evaluation.

Bracken, B. A., & McCallum, R. S. (1998). Universal Nonverbal Intelligence Test: Examiner's manual. Itasca, IL: Riverside Publishing.

Cohen, L., Manion, L., & Morrison, K. (2013). Research Methods in Education (7th ed.). Milton Park, UK: Routledge. https://doi.org/10.4324/9780203720967

Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Hillsdale, NJ: Lawrence Erlbaum Associates.

Cronbach, L. J. (1951). Coefficient alpha and the internal structure of tests. Psychometrika, 16(3), 297-334. https://doi.org/10.1007/BF02310555

Creswell, J.W., & Creswell, J.D. (2017). Research design: Qualitative, quantitative, and mixed methods approaches (5th ed.). Thousand Oaks, CA: SAGE Publications.

de Ayala, R. J. (2009). The theory and practice of item response theory. New York: Guilford Press. https://doi.org/10.1007/s11336-010-9179-z

Durant, K., Peña, E., Peña, A., Bedore, L. M., & Muñoz, M. R. (2019). Not all nonverbal tasks are equally nonverbal: Comparing two tasks in bilingual kindergartners with and without developmental language disorder. Journal of Speech, Language, and Hearing Research, 62(9), 3462–3469. https://doi.org/10.1044/2019_JSLHR-L-18-0331

Eisinga, R., Grotenhuis, M. T., & Pelzer, B. (2013). The reliability of a two-item scale: Pearson, Cronbach, or Spearman-Brown? International Journal of Public Health, 58(4), 637-642. https://doi.org/10.1007/s00038-012-0416-3

Embretson, S. E., & Reise, S. P. (2000). Item response theory for psychologists. Mahwah, NJ: Lawrence Erlbaum Associates. https://doi.org/10.4324/9781410605269

Hambleton, R. K., & Swaminathan, H. (1985). Item response theory: Principles and applications. New York: Kluwer.

Hunt, E. (1995). The role of intelligence in modern society. American Scientist, 93(2), 128-135.

Imbo, I., & LeFevre, J. A. (2009). The role of phonological and visual working memory in complex arithmetic for Chinese- and Canadian-educated adults. Memory & Cognition, 37(2), 176-185. https://doi.org/10.3758/mc.38.2.176

Kaufman, A. S., & Kaufman, N. L. (2004). Kaufman Assessment Battery for Children, Second Edition (KABC-II). Circle Pines, MN: American Guidance Service.

Kaufman, A. S., & Lichtenberger, E. O. (2006). Assessment essentials of the Kaufman Adolescent and Adult Intelligence Test. Hoboken, NJ: John Wiley & Sons.

Kyllonen, P. C., & Christal, R. E. (1990). Reasoning ability is (little more than) working-memory capacity?! Intelligence, 14(4), 389–433. https://doi.org/10.1016/S0160-2896(05)80012-1

Lavrakas, P. J. (2008). Encyclopedia of survey research methods. Thousand Oaks, CA: SAGE Publications.

Lubinski, D. (2010). Spatial ability and STEM: A sleeping giant for talent identification and development. Personality and Individual Differences, 49(4), 344-351. https://doi.org/10.1016/j.paid.2010.03.022

Mix, K. S., Cheng, Y. L., Hambrick, D. Z., Levine, S. C., & Young, C. (2016). Separate but correlated: The latent structure of space and mathematics across development. Journal of Experimental Psychology: General, 145(9), 1206-1227. https://doi.org/10.1037/xge0000182

Naglieri, J. A., & Bardos, A. N. (1997). General Ability Measure for Adults (GAMA). Minneapolis, MN: National Computer Systems.

Naglieri, J. A., & Otero, T. M. (2018). The Cognitive Assessment System—Second Edition: From theory to practice. In D. P. Flanagan & E. M. McDonough (Eds.), Contemporary intellectual assessment: Theories, tests, and issues (pp. 452–485). The Guilford Press.

Neisser, U., Boodoo, G., Bouchard, T. J., Jr., Boykin, A. W., Brody, N., Ceci, S. J., Halpern, D. F., Loehlin, J. C., Perloff, R., Sternberg, R. J., & Urbina, S. (1996). Intelligence: Knowns and unknowns. American Psychologist, 51(2), 77–101. https://doi.org/10.1037/0003-066X.51.2.77

Nunnally, J. C., & Bernstein, I. H. (1994). Psychometric theory (3rd ed.). New York: McGraw-Hill.

Olsson, U. H. (1979). Maximum likelihood estimation of the polychoric correlation coefficient. Psychometrika, 44(4), 443-460. https://doi.org/10.1007/BF02296207

Poropat, A. E. (2009). A meta-analysis of the five-factor model of personality and academic performance. Psychological Bulletin, 135(2), 322–338. https://doi.org/10.1037/a0014996

Raven, J. C. (1938). Progressive Matrices: A perceptual test of intelligence. Individual Forms A and B. London, UK: H. K. Lewis.

Raven, J. C., Court, J. H., & Raven, J. (1983). Manual for Raven's Progressive Matrices and Vocabulary Scales. London, UK: H. K. Lewis.

Roid, G. H., & Miller, L. J. (2013). Leiter International Performance Scale, Third Edition (Leiter-3). Wood Dale, IL: Stoelting Co.

Rushton, J. P., & Jensen, A. R. (2010). The rise and fall of the Flynn effect as a reason to expect a narrowing of the Black–White IQ gap [Editorial]. Intelligence, 38(2), 213–219. https://doi.org/10.1016/j.intell.2009.12.002

Sackett, P. R., Schmitt, N., Ellingson, J. E., & Kabin, M. B. (2001). High stakes testing in employment, credentialing, and higher education: Prospects in a post-affirmative-action world. American Psychologist, 56(4), 302–318. https://doi.org/10.1037/0003-066X.56.4.302

Schmidt, F. L., & Hunter, J. E. (1998). The validity and utility of selection methods in personnel psychology: Practical and theoretical implications of 85 years of research findings. Psychological Bulletin, 124(2), 262-274. https://doi.org/10.1037/0033-2909.124.2.262

Schneider, W. J., & McGrew, K. S. (2012). The Cattell-Horn-Carroll model of intelligence. In D. P. Flanagan & P. L. Harrison (Eds.), Contemporary intellectual assessment: Theories, tests, and issues (pp. 99-144). New York, NY: Guilford Press. https://doi.org/10.4135/9781506326139

Sireci, S. G., & Geisinger, K. F. (1992). Analyzing test content using cluster analysis and multidimensional scaling. Applied Psychological Measurement, 16(1), 17-31. https://doi.org/10.1177/014662169201600102

Spielberger, C. D., & Vagg, P. R. (1995). Test anxiety: Theory, assessment, and treatment. Washington, DC: Taylor & Francis.

Sternberg, R. J. (2003). Wisdom, intelligence, and creativity synthesized. New York: Cambridge University Press. https://doi.org/10.1017/CBO9780511509612

Sternberg, R. J., & Grigorenko, E. L. (2002). Dynamic testing: The nature and measurement of learning potential. New York: Cambridge University Press.

Tavakol, M., & Dennick, R. (2011). Making sense of Cronbach's alpha. International Journal of Medical Education, 2, 53-55. https://doi.org/10.5116/ijme.4dfb.8dfd

Uttal, D. H., Meadow, N. G., Tipton, E., Hand, L. L., Alden, A. R., Warren, C., & Newcombe, N. S. (2013). The malleability of spatial skills: A meta-analysis of training studies. Psychological Bulletin, 139(2), 352-402. https://doi.org/10.1037/a0028446

Wechsler, D. (1997). Wechsler Adult Intelligence Scale–Third Edition (WAIS-III). San Antonio, TX: The Psychological Corporation.

Wechsler, D., & Naglieri, J. A. (2006). Wechsler Nonverbal Scale of Ability (WNV). San Antonio, TX: The Psychological Corporation.